Animating in the Audio Domain [sound, code, synesthesia]

Accurate event scheduling is needed in many situations. In a few of my recent projects I kept running into the same problem: how to keep things in sync and launch events and animations really accurately. These projects are mainly audio-based apps, so timing has become a key problem. One example is sequencing the sounds in Shape Composer, where different “playheads” with different speeds and boundaries read out values from the same visual notation while the user modifies them. It is not that simple to keep everything in sync when the user can change all the parameters of a player.

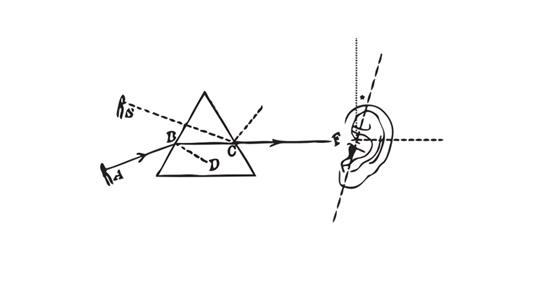

As I am arriving at native application development and user interface design from the world of visual programming languages, I decided to port a few ideas and solutions that worked really well for me; this post is one of the first in this series. Data flow languages for rapid prototyping have a lot of interesting, unique solutions that might also be useful in text-based, production-ready environments (especially for people whose mindset originates in non-text-based programming concepts). Nice examples are the concept of spreads in vvvv, or audio-rate controls in Pure Data. In Pure Data, the name says it all: everything is data, and even otherwise complicated operations on audio signals can be used easily in other domains (controlling events, image generation, simulations, etc.). With the [expr~] object one can operate on audio signal vectors easily, but there is also a truly beautiful concept for playing back sounds: you read out sound values from a sound buffer using another sound. The values of a simple sound wave are used to iterate over the array.

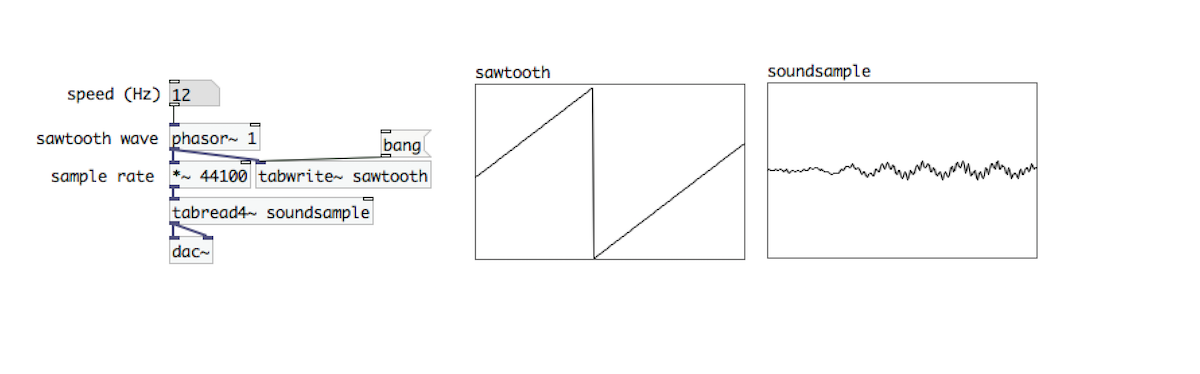

The image above shows how to use a saw wave, a [phasor~] object, to read out values from an array with the [tabread4~] object. The phase (between 0 and 1, multiplied by 44100, the sample rate) reads out individual sample values from the array. As you shift the phase, you iterate over the values. This is the “audio equivalent” of a for loop. The amplitude of the saw wave becomes the length of the loop slice, while its frequency becomes the pitch of the played-back sound. The phase of the saw wave is the current position in the sound sample. This method of using one audio signal to control another is one of the most beautiful underlying concepts of Pure Data, and it extends well into other domains.
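The table lookup described above can be sketched in a few lines of JavaScript. This is only an illustration, not the Pd patch itself: the function names are hypothetical, and plain linear interpolation stands in for the 4-point interpolation that [tabread4~] performs.

```javascript
// A phasor: a ramp that rises from 0 to 1 "frequency" times per second.
// Each call returns the phase for the next audio sample.
function makePhasor(frequency, sampleRate) {
  let phase = 0;
  return function next() {
    const current = phase;
    phase += frequency / sampleRate;
    phase -= Math.floor(phase); // wrap back into the 0..1 range
    return current;
  };
}

// Read one value from the table. The phase is scaled by sliceLength
// (the "amplitude" of the saw wave), so it selects how much of the
// table is looped. Linear interpolation is an assumption of this sketch.
function readTable(table, phase, sliceLength) {
  const position = phase * sliceLength;
  const whole = Math.floor(position);
  const i0 = whole % table.length;
  const i1 = (i0 + 1) % table.length;
  const frac = position - whole;
  return table[i0] + (table[i1] - table[i0]) * frac;
}

// The "audio equivalent of a for loop": one table read per output sample.
function render(table, frequency, sliceLength, sampleRate, count) {
  const phasor = makePhasor(frequency, sampleRate);
  const out = new Float32Array(count);
  for (let n = 0; n < count; n++) {
    out[n] = readTable(table, phasor(), sliceLength);
  }
  return out;
}
```

With a one-second table and a frequency of 1 Hz the sound plays back at its original pitch; doubling the frequency reads the same slice twice as fast.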

See these animations in motion here

The Accurate Timing example that this post is about tries to bring this concept into other languages where you can access the DSP cycle easily. With OpenFrameworks it is easy to call functions within this cycle. ofSoundStream calls your audioOut() function once for every buffer of samples, and the loop inside it that fills the buffer runs once per sample, so anything you put in that loop is stepped at your selected audio sampling rate. Usually this is 44.1 kHz, which means your code runs 44100 times per second, far more precisely than a call made from update() or draw(). The audio thread keeps time even if your application’s frame rate is jumping around or dancing like a Higgs. It can be useful to keep time-based events in a separate, reliable thread, and the audio thread is just perfect for this. This example uses a modified version of an oscillator class available in the AVSys Audio-Visual Workshop GitHub repository to control the events that are visible on the screen.
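To make the idea of "keeping time in the audio thread" concrete, here is a small sketch of a sample counter that fires events at exact sample positions. It is written in JavaScript rather than C++ and is not the code of the example project: SampleClock, schedule() and process() are hypothetical names, and process() stands in for whatever per-buffer callback the audio system provides.

```javascript
// A clock that counts audio samples and fires events at exact sample
// positions, independent of the graphics frame rate.
class SampleClock {
  constructor(sampleRate) {
    this.sampleRate = sampleRate;
    this.sampleCount = 0; // total samples processed so far
    this.events = [];     // pending events, sorted by atSample
  }

  // Register an action to run "seconds" after the clock started.
  schedule(seconds, action) {
    const atSample = Math.round(seconds * this.sampleRate);
    this.events.push({ atSample, action });
    this.events.sort((a, b) => a.atSample - b.atSample);
  }

  // Call this once per audio buffer. The inner loop runs once per
  // sample, which is where the timing accuracy comes from.
  process(bufferSize) {
    for (let i = 0; i < bufferSize; i++) {
      while (this.events.length > 0 &&
             this.events[0].atSample <= this.sampleCount) {
        this.events.shift().action(this.sampleCount);
      }
      this.sampleCount++;
    }
  }
}
```

In a real application the actions should stay very light (setting a flag or a value that the draw loop picks up), since heavy work inside an audio callback causes dropouts.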

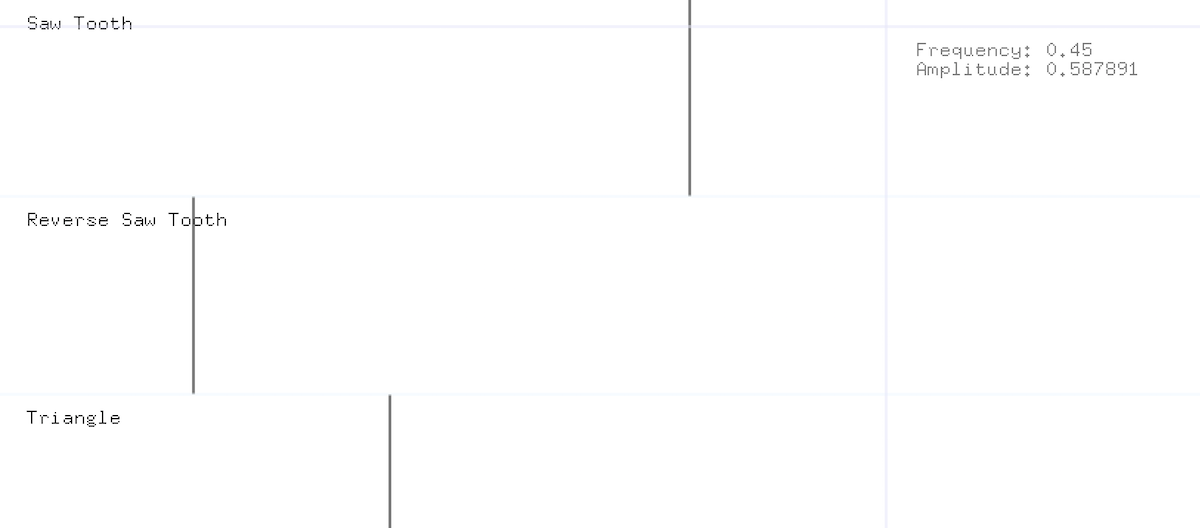

When building things where time really matters (such as sequencers, automated animations or other digital choreography), a flexible, accurate “playback machine” can come in really handy. The concept in this example is just a simple way of mapping one domain to another: the phase of a wave is applied to the relative position of the animated value, while the amplitude of the wave represents the boundaries of the changing value. Finally, the frequency represents the speed. There may be other really interesting ways of animating time-sensitive artefacts, but one that uses the concepts and attributes of sound (and physical waves) can be truly inspiring for developing similar ideas and connecting previously isolated domains.
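The mapping described above (phase to position, amplitude to boundaries, frequency to speed) might look like this in code. The names phaseAt, animate and sineShape are made up for this sketch, and time is assumed to be non-negative.

```javascript
// Phase of an oscillator at a given time: always between 0 and 1.
function phaseAt(timeSeconds, frequency) {
  const cycles = timeSeconds * frequency;
  return cycles - Math.floor(cycles);
}

// Map the phase onto an animated value.
// frequency -> speed of the animation (cycles per second)
// min, max  -> boundaries of the value (the "amplitude")
// shape     -> optional function that bends the 0..1 ramp (assumption)
function animate(timeSeconds, frequency, min, max, shape) {
  const phase = phaseAt(timeSeconds, frequency);
  const unit = shape ? shape(phase) : phase;
  return min + unit * (max - min);
}

// Example shape: turns the saw ramp into a smooth back-and-forth motion.
const sineShape = (phase) => 0.5 - 0.5 * Math.cos(2 * Math.PI * phase);

// Halfway through a one-second cycle, an x position between 100 and 300:
const x = animate(0.5, 1, 100, 300); // 200
```

Changing the frequency while the animation runs changes its speed, and changing min and max moves its boundaries, which is the behaviour the different playheads need.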

The source for this post is available at: Audio Rate Animations on GitHub

InBetween States [Sound, Process, Notation]

“Varying combination of sounds and “non-sounds” (silence) through time, sounding forms (Eduard Hanslick), time length pieces (John Cage). These definitions of music are referring to both a concrete and a non physical presence that are holding the vision of a special space-time constellation. The process of investigating the distribution of sounds is leading us to the magical state of the musical thought. Waiting, along the observation procedure are constructing an extension of the listeners traditional relationship with music.

What states might a musical thought have? How does interpretation is taking part in the process? How does representation affected? What are the bases of the musical experience? The exhibition is seeking to find these “in-between” states through the works of the New Music Studio , and students of Faculty of Music and Visual Arts University of Pécs and - of course - the presence of the visitors.” (from the exhibition catalogue)

Exhibition Space (2B Gallery)

New Music Studio is a group of musicians formed in the seventies in Budapest. They focused on a methodology and philosophy of contemporary music that differed from the canonized mainstream. They brought “Cageian” influences and the legacy of Fluxus to Eastern European society. The main founders of the group (Laszlo Sary, Laszlo Vidovszky, Zoltan Jeney, Peter Eötvös, Zoltán Kocsis, András Wilheim et al.), amongst other creative minds, produced several interesting pieces that questioned traditional musical interpretation methods, notation, performance and the articulation of musical expression. Their legacy is hard to interpret because of the large number of their pieces. These neither fit into the traditional performance space nor adjust easily to gallery spaces.

The exhibition is a truly great reflection on this situation: installations, homage works and cross references are introduced to the audience in order to shed light on most of these problematic aspects. The tribute works of the next generation are also deeply experimental and fresh pieces that use post-digital concepts and the aesthetics of raw, ubiquitous, programmable electronics, where people can focus on content and aural space instead of regular computers and the like. The presented spatial sound installations find their place perfectly in the gallery space. The collected documents and visual landmarks of the exhibition give hints on very interesting topics without aiming for completeness, which makes a good starting point for diving deeper into this world. The exhibition is curated by Reka Majsai.

Hommage á Autoconcert (Balázs Kovács & students from University of Pécs)

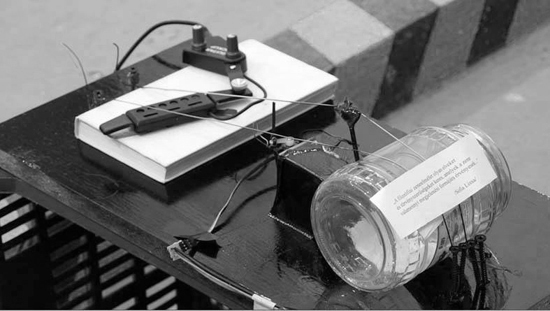

“Hommage á Autoconcert” by Balazs Kovacs (xrc) [link] & students from the University of Pécs is a sound installation constructed from several found objects and instruments. It is a tribute to the so-called “Autoconcert” (1972) by Laszlo Vidovszky. The original piece has a diverse set of found objects that fall from above at different time intervals during the performance. The present installation deals with the sound events in a reproducible way: the objects do not fall, but get hit by small sticks from time to time. These events construct a balanced aural space where tiny noises, amplified strings and colliding cymbals can be heard as an acoustic background layer. The sticks are moved by little servo motors, and the logic is controlled by a few Arduino boards.

Repeating and overlapping of three musical processes (Gábor Lázár)

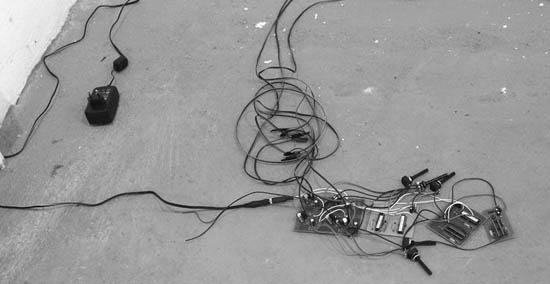

“Repeating and overlapping of three musical processes” by Gábor Lázár is also a sound installation, made with custom microcontrollers and three speakers set in plastic glasses as acoustic resonators. The processes are based on ever-changing time manipulations between musical trigger events. Dividing time within these in-between temporal events causes ever-increasing pitch shifting in the auditory field. The overall sound architecture forms a very saturated and diverse experience.

Visual Notations (New Music Studio)

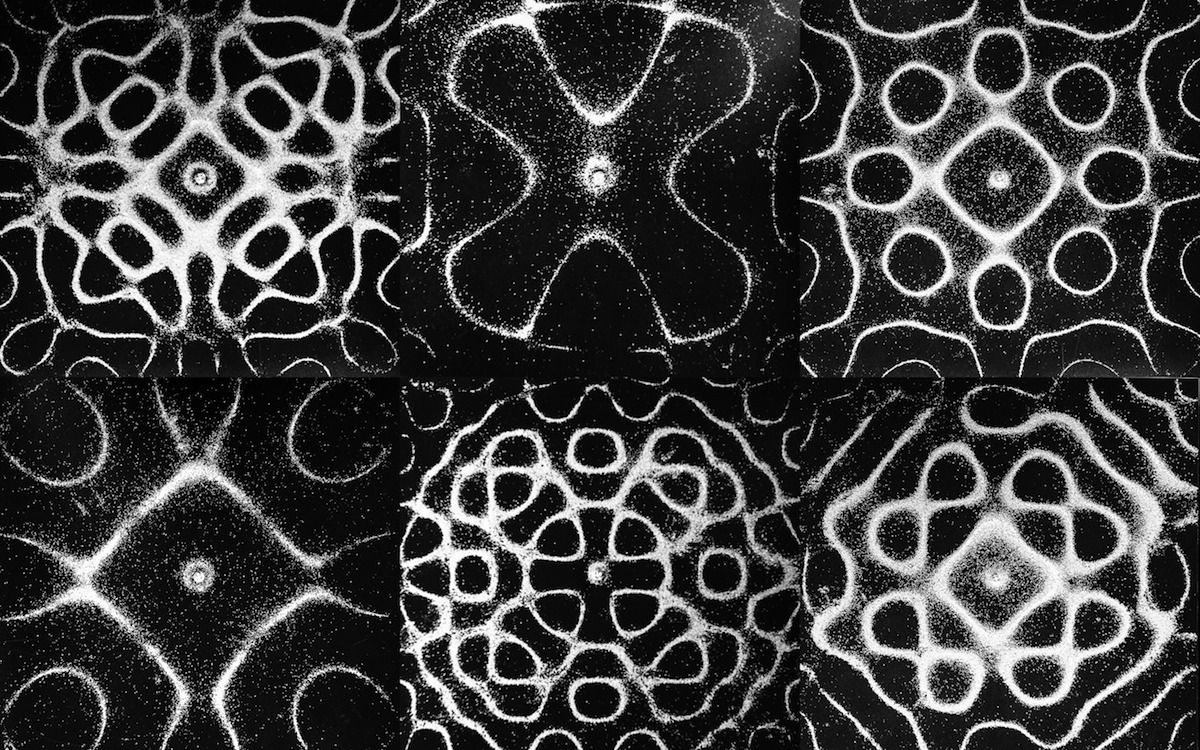

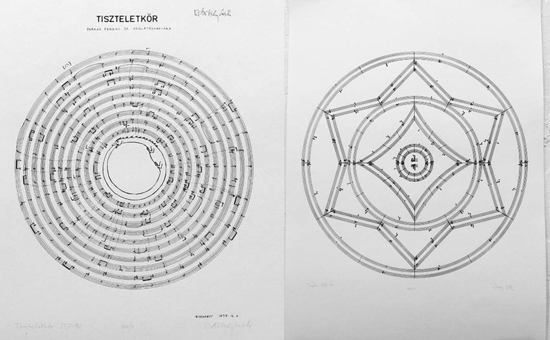

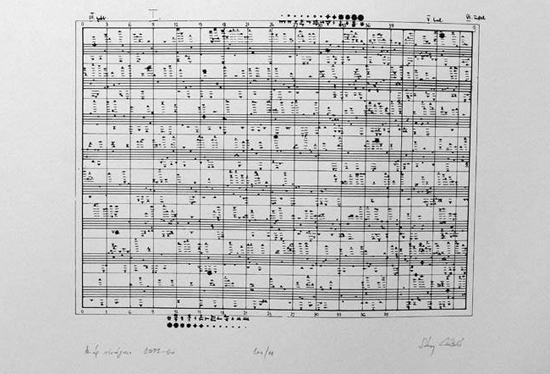

“Visual Notations” by the New Music Studio members themselves is in fact a set of beautifully crafted notation pieces. Various nonstandard notation techniques are introduced here: the graphical alignment of stellar constellations (Flowers of heaven, Sary), a spiral-like, self-eating dragon (Lap of honour, Vidovszky) and ancient Hindu symbols (Yantra, Jeney) all take part in the semantics and development of these sounding images.

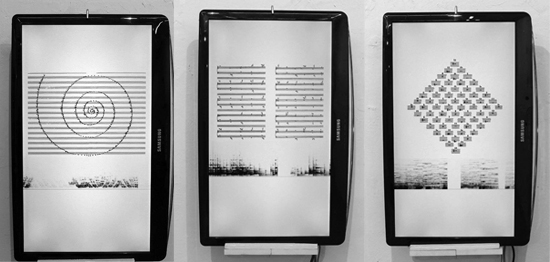

Three pieces by Sáry (reinterpretation & software by Agoston Nagy)

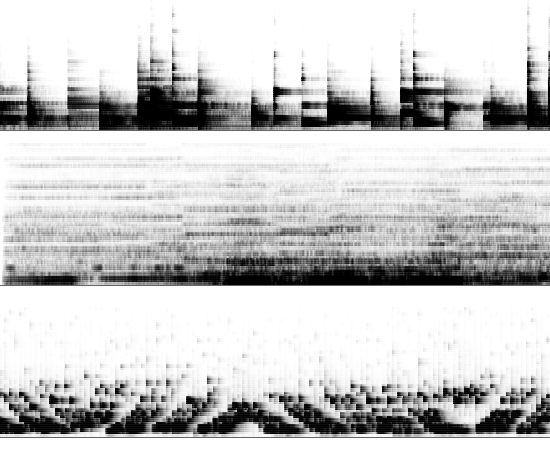

There is also an installation I’ve been involved with: an interpretation of three pieces by Laszlo Sary. The triptych lets the observer see and hear the inner sound structures at the same time. The upper region of the screen displays the original notation, which can be read as a spatial constellation of the sounds to be played. Each work has a different notation and different instructions on how to play the notes. The bottom region displays a live spectrogram of the actual sound. This shows the temporal distribution of the events, which has different characteristics in each piece.

Musical Spectra of the three different pieces by Sáry

“Sunflower” (1989, based on Snail Play, 1975) has a spiral-like spatial distribution on the sheet, and these patterns are clearly visible in the musical spectra: infinite ladders run up and down as the musicians play on three percussive marimbas. “Sounds for” (1972) is based on some predefined notes that the performer can access in any order they prefer. Larger, distant, sudden triggers define a stricter, cityscape-like spectrum. The final piece, “Full Moon” (1986), is based on a permutation procedure [link]: the individual notes are played back simultaneously by four (or more) musicians. Their individual routes define the final constellation of sounds. The visible spectrum is like a continuous flow, where soft pitch intonations are in focus, in contrast to the previous two, rhythm-oriented works. The setup is made with free & open source tools, using OpenFrameworks on three Raspberry Pi boards.

Images of the exhibition opening

It is hard to describe all the aspects of the exhibited works and the legacy of the New Music Studio, as their works had many local influences in the East European region in their time. The states of “in-between-ness”, however, are clearly recognizable on several layers of the whole event: the political and cultural influences of the original artists, the continuous phase shift between the musical and the visual domain, and the tribute works whose creators are halfway between authors and interpreters. The exhibition is altogether a really important summary of a niche cultural landmark in the Hungarian and international contemporary music scene.

Thanks to Gabor Toth for the photos.

Tempered Music Experiments [theory, music, 2d space]

While trying out different ways of representing musical structures on an interactive 2D surface, I made some experiments as part of my thesis and client work. Three systems were created as “side effects” of my current research interests:

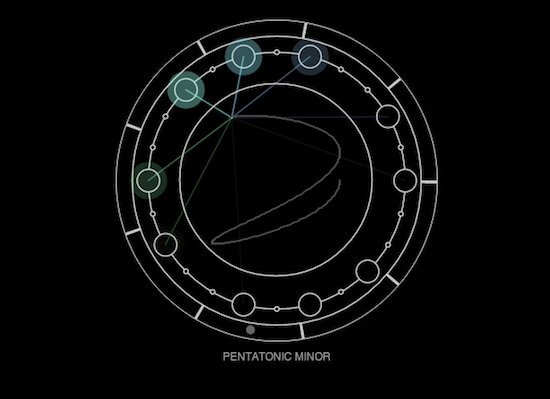

“Harmonizer” is a simple musical instrument for studying traditional scales through harmonic movements. Traditional scales are the basis of folk songs, jazz and classical music, and include modal scales, pentatonic scales and different minor / major structures. Harmonic motion is based on simple trigonometric pendulums (sine, cosine, etc.) that describe really organic motions. The goal of Harmonizer is to collide these two concepts and see what happens.
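One possible way to collide the two concepts is to let a sine pendulum swing across the steps of a scale. The sketch below is not Harmonizer's actual code; the scale, the function names and the mapping are all assumptions chosen for illustration.

```javascript
// Semitone offsets of a minor pentatonic scale (an example choice).
const MINOR_PENTATONIC = [0, 3, 5, 7, 10];

// A pendulum swinging between 0 and 1, driven by a sine function.
function pendulum(timeSeconds, frequency) {
  return 0.5 + 0.5 * Math.sin(2 * Math.PI * frequency * timeSeconds);
}

// Quantize a 0..1 position to a MIDI note on the scale,
// spread over the given number of octaves above rootMidi.
function scaleNote(position, scale, rootMidi, octaves) {
  const steps = scale.length * octaves;
  const index = Math.min(steps - 1, Math.floor(position * steps));
  const octave = Math.floor(index / scale.length);
  return rootMidi + 12 * octave + scale[index % scale.length];
}

// Standard equal-temperament conversion (A4 = MIDI 69 = 440 Hz).
function midiToHz(midi) {
  return 440 * Math.pow(2, (midi - 69) / 12);
}

// The note a slow pendulum points at after 0.3 seconds:
const note = scaleNote(pendulum(0.3, 0.5), MINOR_PENTATONIC, 57, 2);
const hz = midiToHz(note);
```

Several pendulums with slightly different frequencies drift in and out of phase, which is one way organic harmonic movement could emerge from such a simple rule.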

Then, there is the more chaotic sounding “Fragmenter”. It is “temporally tempered”: a sound sample is cut up automatically into slices based on onset events. These snippets are then mapped to different elements in space. The observer can listen through them by simply drawing over them. The drawing gesture is repeating itself so every unique gesture creates a completely different loop.
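Slicing a sample at onset events can be approximated with a very simple energy detector. The sketch below is an assumption about how such slicing could work, not Fragmenter's implementation: it marks an onset wherever the energy of a frame jumps above the previous frame's energy by more than a threshold.

```javascript
// Return the sample positions where a frame's mean energy rises by
// more than "threshold" compared to the previous frame.
function findOnsets(samples, frameSize, threshold) {
  const onsets = [];
  let previousEnergy = 0;
  for (let start = 0; start + frameSize <= samples.length; start += frameSize) {
    let energy = 0;
    for (let i = start; i < start + frameSize; i++) {
      energy += samples[i] * samples[i];
    }
    energy /= frameSize;
    if (energy - previousEnergy > threshold) {
      onsets.push(start);
    }
    previousEnergy = energy;
  }
  return onsets;
}

// Cut the sample into slices, each running from one onset to the next.
function sliceAtOnsets(samples, onsets) {
  return onsets.map((start, k) => {
    const end = k + 1 < onsets.length ? onsets[k + 1] : samples.length;
    return samples.slice(start, end);
  });
}
```

Each resulting slice could then be attached to an element on the surface and triggered when the drawing gesture passes over it.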

The last experiment is a “Verlet Music” machine where freehand drawings are sonified with an elastic string. The physical parameters of the string can be altered by dragging it around on the surface. The string is tuned to a quasi-comfortable scale, and each dot on the string has a unique pitch. When a dot passes over a drawn shape, it becomes audible.
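The name refers to Verlet integration, a common way to simulate strings and ropes. The following is a generic sketch of that technique under my own assumptions (point objects with x, y, px, py and an optional pinned flag); it does not reproduce the experiment's code.

```javascript
// One Verlet step: the velocity is implied by the difference between
// the current position (x, y) and the previous one (px, py).
function verletStep(points, gravity, dt) {
  for (const p of points) {
    if (p.pinned) continue;
    const vx = p.x - p.px;
    const vy = p.y - p.py;
    p.px = p.x;
    p.py = p.y;
    p.x += vx;
    p.y += vy + gravity * dt * dt;
  }
}

// Pull two neighbouring dots back towards their rest distance.
// Each unpinned dot takes half of the correction; running this a few
// times per frame over all links makes the string stiffer.
function satisfyLink(a, b, restLength) {
  const dx = b.x - a.x;
  const dy = b.y - a.y;
  const distance = Math.hypot(dx, dy);
  if (distance === 0) return;
  const correction = (distance - restLength) / distance / 2;
  if (!a.pinned) { a.x += dx * correction; a.y += dy * correction; }
  if (!b.pinned) { b.x -= dx * correction; b.y -= dy * correction; }
}
```

Dragging a dot simply means overwriting its position each frame; the links then pull the rest of the string along.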

You can try out Harmonizer here. The synthesis is built with WebPd using the WebAudio API, so the sound might not play cleanly on slower machines.

PolySample [Pure Data]

I’ve been involved in a few projects recently where simultaneous playback of multiple sounds was needed. Working on Syntonyms (with Abris Gryllus and Marton Andras Juhasz), SphereTones (with Binaura) and No Distance No Contact (with Szovetseg39) repeatedly brought me back to the concept of playing overlapping sound samples, that is, starting a sample again before its previous instance has finished. The Pure Data musical environment is really useful for low-level sound manipulation and can be tweaked in specific directions. I searched the web for solutions but didn’t find a really simple polyphonic sound sampler with adjustable pitch that is capable of playing back multiple instances of the same sound. For instance, if you play long, resonating, vibrating sounds, it is useful to let the previous one finish so the next sound doesn’t interrupt it; there are then two instances of the same sound, both left to decay smooth and clear. Like raindrops, percussion, etc.

As a starting point I found myself again in the territory of dynamic patching. As you can see in the video (an older Pd experiment of mine), some things can be left to Pd itself: creating, deleting and connecting objects can be done quite easily by sending messages to Pd. With PolySample, once the user creates a [createpoly] object, it automatically creates several samplers inside itself. The first argument is the name of the sound file to be played back. The number of generated samplers is given as the second argument. There is an optional third argument for changing the sampling rate (44100 by default), which is useful when working with libPd and the like, where some devices and tablets operate at a lower sampling rate. So a sampler can be created as

[createPoly <sound filename> <numberOfPolyphony> <sample rate>]

The first inlet of the object accepts a list: the first element is the pitch, the second element is the volume of the sample to be played. This is useful when trying to mimic string instruments or samples with a wide dynamic range. This volume sets the initial volume of each sample (within the polyphony), whereas the second inlet accepts a float that controls the overall level, like a mixer.
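The behaviour described above (several voices sharing one sound, a per-trigger pitch and volume, and one overall level) can be modelled outside Pd as well. The sketch below is a JavaScript illustration, not a translation of the patch: the PolySampler class, its round-robin voice allocation and its method names are assumptions.

```javascript
// A tiny polyphonic sampler model: every trigger starts a new voice,
// so earlier instances of the sound keep decaying underneath.
class PolySampler {
  constructor(voiceCount) {
    // position < 0 marks a voice as idle
    this.voices = Array.from({ length: voiceCount }, () => (
      { pitch: 1, volume: 0, position: -1 }
    ));
    this.nextVoice = 0;
    this.masterVolume = 1; // plays the role of the second inlet
  }

  // Start the sound on the next voice (round-robin, an assumption).
  // pitch is a playback-rate factor, volume is the per-voice level.
  trigger(pitch, volume) {
    const index = this.nextVoice;
    const voice = this.voices[index];
    voice.pitch = pitch;
    voice.volume = volume;
    voice.position = 0;
    this.nextVoice = (index + 1) % this.voices.length;
    return index;
  }

  // Produce one output sample by mixing all active voices.
  tick(sample) {
    let mix = 0;
    for (const voice of this.voices) {
      if (voice.position < 0) continue;
      const i = Math.floor(voice.position);
      if (i >= sample.length) { voice.position = -1; continue; }
      mix += sample[i] * voice.volume;
      voice.position += voice.pitch;
    }
    return mix * this.masterVolume;
  }
}
```

With only a handful of voices, the oldest instance is cut off when the allocation wraps around, which is why the number of voices is worth exposing as an argument.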

You can create as many polyphonic samplers as you wish (keep in mind that smaller processors can freak out quite quickly if you create tons of sample buffers at the same time). The project is available on GitHub, here.

Built with Pd Vanilla, should work with libPd, webPd, RJDJ and other embeddable Pd projects.

Javascript Sound Experiments - Spectrum Visualization [ProcessingJS + WebAudio]

This entry is just a quick add-on to my ongoing sound-related project that may be worth sharing. I came across the need to visualize a sound spectrum. You can find dozens of tutorials on the topic, but I didn’t expect it to be so easy to do with processingJS & Chrome’s WebAudio API. I found a brilliant and simple guide to the web audio analyser node here. It builds the visuals with the native canvas animation technique. I used a few functions from this tutorial and made a processingJS port of it. The structure of the system is similar to my previous post on filtering:

1. Load & playback the sound file with AudioContext (javascript)

2. Use a function to bridge native javascript to processingJS (pass the spectrum data as an array)

3. Visualize the result on the canvas

Load & playback the sound file with AudioContext (javascript)

We are using a simpler version of audio cooking within this session compared to the previous post. The SoundManager.js file simply prepares our audio context, loads and plays the specified sound file once the following functions are called:

    initSounds();
    startSound('ShortWave.mp3');

We call these functions when the document is loaded. That is all there is to sound playback. Now that we have the raw audio data in the speakers, let’s analyze it.

Use a function to bridge native javascript to processingJS

There is a function to pass the spectrum data as an array, placed in the head section of our index.html:

    function getSpektra()
    {
        // New typed array for the raw frequency data
        var freqData = new Uint8Array(analyser.frequencyBinCount);
        // Put the raw frequency data into the newly created array
        analyser.getByteFrequencyData(freqData);
        var pjs = Processing.getInstanceById('Spectrum');
        if (pjs != null)
        {
            pjs.drawSpektra(freqData);
        }
    }

This function is based on the built-in analyser that comes with the WebAudio API. The “freqData” object is an array that will contain all the spectral information we need (1024 spectral bins by default) to be used later on. To find out more about the background of spectrum analysis, such as the Fourier transform, spectral bins and other spectrum-related terms, Wikipedia has a brief introduction.

However, we don’t need to know too much about the background. In our array, the numbers represent the energy of the sound from the lowest pitch to the highest pitch in 1024 discrete steps, every time we call this function (it is a linear spectral distribution by default). We take this array and pass it to our processing sketch within the getSpektra() function.
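Because the distribution is linear, the centre frequency of each step follows directly from the bin index. The two helper functions below are hypothetical names for this sketch; they assume the default analyser size of 2048 (which yields the 1024 bins mentioned above) and a 44100 Hz audio context.

```javascript
// Centre frequency of a spectral bin. With fftSize 2048 at 44100 Hz
// each bin is roughly 21.5 Hz wide.
function binToFrequency(binIndex, sampleRate, fftSize) {
  return binIndex * sampleRate / fftSize;
}

// The bin that a given frequency falls closest to.
function frequencyToBin(frequency, sampleRate, fftSize) {
  return Math.round(frequency * fftSize / sampleRate);
}

// Where does a 440 Hz tone show up in the array?
const binOfA4 = frequencyToBin(440, 44100, 2048); // 20
```

This also shows why a linear spectrum looks crowded on the left: most musically relevant pitches land in the first hundred or so of the 1024 bins.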

Visualize the result on the canvas

From within the processing code, we can call this method any time we need. We just have to create a JavaScript interface to be able to call any function outside of our sketch (as shown in the previous post, also):

    interface JavaScript
    {
        void getSpektra();
    }

    // make the connection (passing as the parameter) with javascript
    void bindJavascript(JavaScript js)
    {
        javascript = js;
    }

    // instantiate javascript interface
    JavaScript javascript;

That is all. Now we can use our array that contains all the spectral information by calling the getSpektra() function from our sketch:

    // error checking
    if (javascript != null)
    {
        // control function for sound analysis
        javascript.getSpektra();
    }

We do this in the draw function. getSpektra() passes the array to processing in every frame, so we can create our realtime visualization:

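    // function to use the analyzed array
    void drawSpektra(int[] sp)
    {
        // your nice visualization comes here...
        fill(0);
        // spread the bars across the full canvas width
        float barWidth = width / float(sp.length);
        for (int i = 0; i < sp.length; i++)
        {
            // a negative height draws each bar upwards from the baseline
            rect(i * barWidth, height - 30, barWidth, -sp[i] / 2);
        }
    }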

As usual, you can download the whole source code from here, or try it out first with the live demo here. Please note that the demo currently works only with the latest stable version of Google Chrome.