Animating in the Audio Domain [sound, code, synesthesia]

Many situations call for accurate event scheduling. In several of my recent projects I kept running into the same problem: how to keep things in sync and launch events and animations with real accuracy. These projects are mainly audio-based apps, so timing became a key issue. One example is sequencing the sounds in Shape Composer, where different “playheads” with different speeds and boundaries read values from the same visual notation while the user modifies them. Keeping everything in sync is not that simple, especially when the user can change every parameter of a player.

As I am coming to native application development and user interface design from the world of visual programming languages, I decided to port a few ideas and solutions that worked really well for me; this post is one of the first in that series. Dataflow languages for rapid prototyping contain a lot of interesting, unique solutions that might also be useful in text-based, production-ready environments (especially for people whose mindset comes from non-text-based programming concepts). Nice examples are the concept of spreads in vvvv and audio-rate controls in Pure Data. In Pure Data the name says it all: everything is data, and even otherwise complicated operations on audio signals can easily be used in other domains (controlling events, image generation, simulations, etc.). With the [expr~] object one can operate on audio signal vectors easily, but there is also a truly beautiful concept for playing back sounds: you read sound values out of a sound buffer using another sound. The values of a simple waveform are used to iterate over the array.

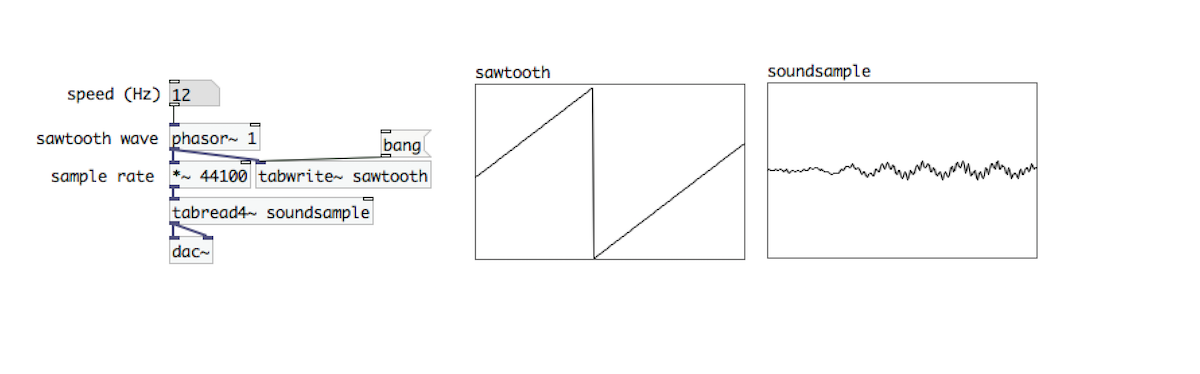

The image above shows how to use a saw wave, generated by a [phasor~] object, to read values from an array with the [tabread4~] object. The phase (between 0 and 1, multiplied by the sample rate, here 44100) reads individual sample values out of the array. As you shift the phase, you iterate over the values. This is the “audio equivalent” of a for loop. The amplitude of the saw wave becomes the length of the loop slice, while its frequency becomes the pitch of the played-back sound. The phase of the saw wave is the current position in the sound sample. Using one audio signal to control another is one of the most beautiful underlying concepts of Pure Data, and it extends well into other domains.
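The same idea can be sketched outside Pure Data in plain C++. This is only an illustration under assumptions: `Phasor`, `readTable` and `playSlice` are hypothetical names, not part of Pure Data or of the project's source, and the lookup uses linear interpolation rather than the 4-point interpolation of [tabread4~].

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical phasor: a ramp that rises from 0 to 1 `frequency` times per second.
struct Phasor {
    double phase = 0.0;          // current position in the cycle, kept in [0, 1)
    double frequency = 1.0;      // cycles per second
    double sampleRate = 44100.0; // samples per second

    // Return the current phase, then advance it by one sample.
    double tick() {
        const double current = phase;
        phase += frequency / sampleRate;
        phase -= std::floor(phase); // wrap back into [0, 1)
        return current;
    }
};

// Read `table` at a fractional index, wrapping at the end.
// Assumption: linear interpolation, chosen only to keep the sketch short.
double readTable(const std::vector<double>& table, double index) {
    const std::size_t n = table.size();
    if (n == 0) return 0.0;
    double wrapped = std::fmod(index, static_cast<double>(n));
    if (wrapped < 0.0) wrapped += static_cast<double>(n);
    const double base = std::floor(wrapped);
    const std::size_t i0 = static_cast<std::size_t>(base) % n;
    const std::size_t i1 = (i0 + 1) % n;
    const double frac = wrapped - base;
    return table[i0] + frac * (table[i1] - table[i0]);
}

// Scan the table with the phasor: the "audio equivalent" of a for loop.
// `sliceLength` plays the role of the ramp's amplitude (samples covered per cycle).
std::vector<double> playSlice(const std::vector<double>& table, Phasor& phasor,
                              double sliceLength, std::size_t count) {
    std::vector<double> out;
    out.reserve(count);
    for (std::size_t i = 0; i < count; ++i) {
        out.push_back(readTable(table, phasor.tick() * sliceLength));
    }
    return out;
}
```

Raising `frequency` scans the slice faster (higher pitch), while changing `sliceLength` changes how much of the table one cycle covers.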

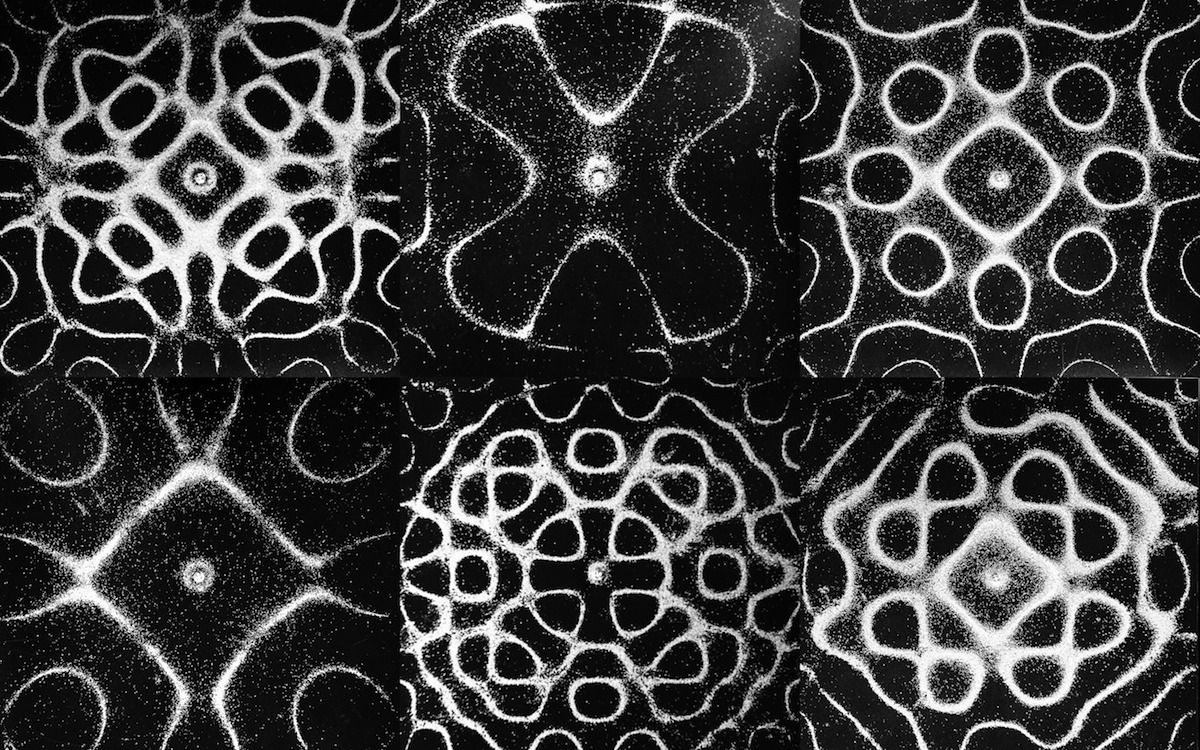

See these animations in motion here

The Accurate Timing example that this post is about tries to bring this concept into other languages where you can access the DSP cycle easily. With openFrameworks it is easy to call functions within this cycle. The audioOut() callback, which ofSoundStream drives, is called once per audio buffer, and anything you put inside its per-sample loop runs at your selected audio sampling rate. Usually this is 44.1 kHz, which means your code can run 44100 times per second, a far finer resolution than making the call in an update() or draw() function. The audio thread keeps time even if your application’s frame rate is jumping around or dancing like a higgs. It can be useful to keep time-based events in a separate, reliable thread, and the audio thread is just perfect for this. This example uses a modified version of an oscillator class, available in the AVSys Audio-Visual Workshop GitHub repository, to control the events that are visible on the screen.
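To make the timing idea concrete, here is a minimal sketch in plain C++ with no openFrameworks dependency. `SampleClock` and `processBuffer` are hypothetical names: `processBuffer` only stands in for an audio callback such as audioOut(), and this is not the oscillator class from the AVSys repository.

```cpp
#include <cstddef>
#include <functional>

// Hypothetical sample-accurate clock, meant to be ticked once per audio sample.
struct SampleClock {
    double sampleRate = 44100.0;  // samples per second
    double frequency = 2.0;       // events per second
    double phase = 0.0;           // position inside the current cycle, in [0, 1)
    std::size_t sampleCount = 0;  // samples processed so far

    // Called with the index of the sample on which a cycle completed.
    std::function<void(std::size_t)> onCycle;

    void tick() {
        phase += frequency / sampleRate;
        if (phase >= 1.0) {       // the ramp wrapped: fire the event
            phase -= 1.0;
            if (onCycle) onCycle(sampleCount);
        }
        ++sampleCount;
    }
};

// Stand-in for an audio callback: it is invoked once per buffer,
// and the loop body runs once per sample.
void processBuffer(SampleClock& clock, float* output,
                   std::size_t bufferSize, std::size_t nChannels) {
    for (std::size_t i = 0; i < bufferSize; ++i) {
        clock.tick();
        for (std::size_t c = 0; c < nChannels; ++c) {
            output[i * nChannels + c] = 0.0f; // silence; the clock only drives events
        }
    }
}
```

Because the event is tied to a sample index rather than to a frame, its timing does not depend on how often the screen is redrawn. In a real application the handler should only update plain values that the drawing code reads later, since the callback runs on the audio thread.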

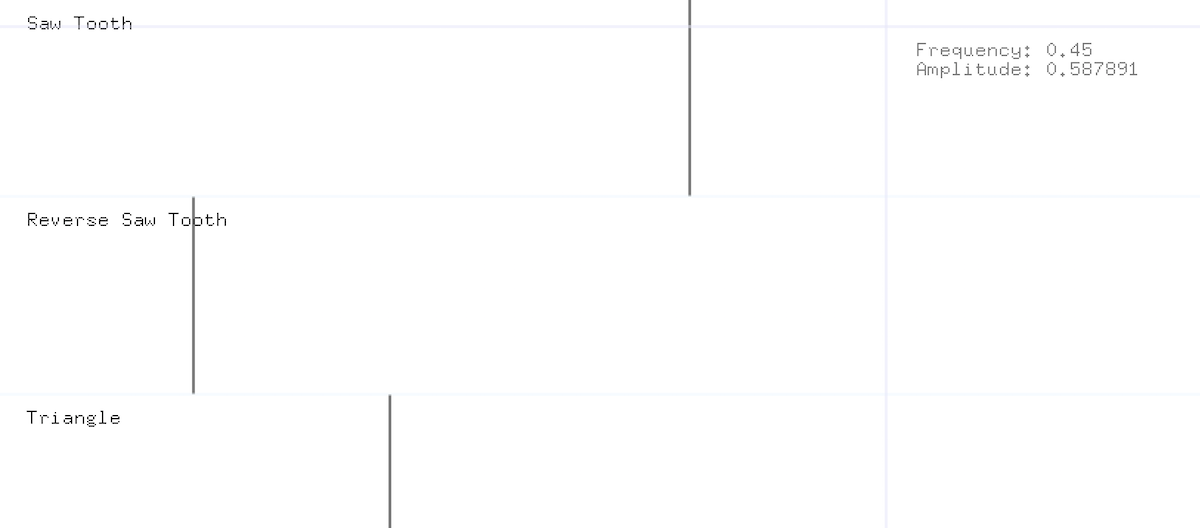

When building things where time really matters (such as sequencers, automated animations or other digital choreography), a flexible, accurate “playback machine” can come in really handy. The concept in this example is just a simple way of mapping one domain onto another: the phase of a wave is applied to the relative position of the animated value, while the amplitude of the wave represents the boundaries of the changing value. Finally, the frequency represents the speed. Even though there may be other really interesting ways of animating time-sensitive artefacts, the one that uses the concept and attributes of sound (and physical waves) can be truly inspiring for developing similar ideas and connecting concepts between previously isolated domains.
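The mapping described above can be written down in a few lines. This is a rough sketch with hypothetical names (`AnimatedValue`, `advance`, `value`), assuming a simple saw-shaped ramp; the actual project may shape or store these values differently.

```cpp
#include <cmath>

// Hypothetical animated parameter driven by a saw-shaped ramp.
struct AnimatedValue {
    double lower = 0.0;     // boundaries of the value: the wave's "amplitude"
    double upper = 1.0;
    double frequency = 1.0; // sweeps per second: the speed of the animation
    double phase = 0.0;     // relative position inside the sweep, in [0, 1)

    // Advance the ramp by `seconds`. Inside an audio callback this would be
    // one sample period, i.e. 1.0 / sampleRate.
    void advance(double seconds) {
        phase += frequency * seconds;
        phase -= std::floor(phase); // wrap so the animation loops
    }

    // Map the phase onto the boundaries to get the current animated value.
    double value() const {
        return lower + phase * (upper - lower);
    }
};
```

For example, with `lower = 100`, `upper = 300` and `frequency = 0.5`, the value sweeps from 100 to 300 once every two seconds and then starts over.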

The source for this post is available at: Audio Rate Animations on GitHub