Javascript Sound Experiments - Spectrum Visualization [ProcessingJS + WebAudio]

This entry is just a quick add-on for my ongoing sound-related project that may be worth sharing. I needed to visualize the spectrum of a sound. You can find dozens of tutorials on the topic, but I didn’t expect it to be so easy to do with ProcessingJS & Chrome’s WebAudio API. I found a brilliant and simple guide on the Web Audio analyser node here. It builds the visuals with native canvas animation techniques. I took a few functions from this tutorial and made a ProcessingJS port of it. The structure of the system is similar to my previous post on filtering:

1. Load & playback the sound file with AudioContext (javascript)

2. Use a function to bridge native javascript to processingJS (pass the spectrum data as an array)

3. Visualize the result on the canvas

Load & playback the sound file with AudioContext (javascript)

We are using a simpler audio setup in this post than in the previous one. The SoundManager.js file simply prepares our audio context, then loads and plays the specified sound file once the following functions are called:

initSounds();

startSound('ShortWave.mp3');

We call these functions when the document is loaded. That is all there is to sound playback. Now that the audio is reaching the speakers, let’s analyze it.
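SoundManager.js itself is not listed in this post. As a rough sketch of what such a loader might do, assuming the audio file has already been fetched into an ArrayBuffer, the hypothetical helper below decodes the data and plays it through an analyser node (playThroughAnalyser is a placeholder name, not a function from the source files). The audio context is passed in as a parameter so the wiring is easy to follow.

```javascript
// Hypothetical helper, not taken from SoundManager.js.
// context: an AudioContext, encodedAudio: ArrayBuffer with the file contents,
// onReady: called with (analyser, source) once playback has started.
function playThroughAnalyser(context, encodedAudio, onReady) {
  // The analyser taps the signal without changing it
  var analyser = context.createAnalyser();
  analyser.fftSize = 2048; // gives 1024 frequency bins
  analyser.connect(context.destination);

  context.decodeAudioData(encodedAudio, function (buffer) {
    // Chain: buffer source -> analyser -> speakers
    var source = context.createBufferSource();
    source.buffer = buffer;
    source.connect(analyser);
    source.start(0);
    onReady(analyser, source);
  }, function (err) {
    console.error('Could not decode audio data', err);
  });

  return analyser;
}
```

In a browser, the context would come from new AudioContext() and the ArrayBuffer from an XMLHttpRequest with responseType set to 'arraybuffer' (or from fetch).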

Use a function to bridge native javascript to processingJS

There is a function to pass the spectrum data as an array, placed in the head section of our index.html:

function getSpektra()
{
  // New typed array for the raw frequency data
  var freqData = new Uint8Array(analyser.frequencyBinCount);
  // Put the raw frequency into the newly created array
  analyser.getByteFrequencyData(freqData);
  var pjs = Processing.getInstanceById('Spectrum');
  if(pjs!=null)
  {
    pjs.drawSpektra(freqData);
  }
}

This function is based on the built-in analyser node of the WebAudio API. The “freqData” object is an array that will contain all the spectral information we need (1024 spectral bins by default) to be used later on. To find out more about the background of spectrum analysis, such as the Fourier transform, spectral bins, and other spectrum-related terms, Wikipedia has a brief introduction.

However, we don’t need to know too much about the background. In our array, the numbers (values from 0 to 255) represent the energy of the sound from the lowest pitch to the highest pitch in 1024 discrete steps, refreshed every time we call this function (it is a linear spectral distribution by default). We pass this array to our Processing sketch from within the getSpektra() function.
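Because the distribution is linear, each bin covers an equal slice of the range between 0 Hz and half the sample rate. A small sketch of that relationship, assuming the analyser's default fftSize of 2048 and a 44100 Hz context (both values are assumptions; check analyser.fftSize and context.sampleRate on your own page):

```javascript
// Frequency (Hz) that a given analyser bin corresponds to.
// fftSize is twice frequencyBinCount.
function binToFrequency(binIndex, sampleRate, fftSize) {
  return binIndex * sampleRate / fftSize;
}

// Inverse: index of the bin nearest to a given frequency,
// clamped to the last available bin.
function frequencyToBin(frequency, sampleRate, fftSize) {
  var bin = Math.round(frequency * fftSize / sampleRate);
  return Math.min(bin, fftSize / 2 - 1);
}
```

With these assumed values, neighbouring bins are about 21.5 Hz apart, so the bass range occupies only a handful of bins at the left edge of the array.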

Visualize the result on the canvas

From within the Processing code, we can call this method any time we need. We just have to create a JavaScript interface to be able to call functions outside of our sketch (as also shown in the previous post):

interface JavaScript
{
  void getSpektra();
}

// make the connection (passing as the parameter) with javascript
void bindJavascript(JavaScript js)
{
  javascript = js;
}

// instantiate javascript interface
JavaScript javascript;

That is all. Now we can get the array that contains all the spectral information by calling the getSpektra() function from our sketch:

// error checking
if(javascript!=null)
{
  // control function for sound analysis
  javascript.getSpektra();
}

We do this in the draw function. getSpektra() passes the array to Processing in every frame, so we can create our realtime visualization:

// function to use the analyzed array
void drawSpektra(int[] sp)
{
  // your nice visualization comes here...
  fill(0);
  for (int i=0; i<sp.length; i++)
  {
    rect(i,height-30,width/sp.length,-sp[i]/2);
  }
}
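The loop above draws one bar per bin, starting at x = i, so it fits best on a canvas that is at least as wide as the array is long. On a narrower canvas, one option is to average neighbouring bins into a smaller number of bars before drawing. A minimal sketch of that idea in plain JavaScript (averageBins and barCount are illustrative names, not part of the original source):

```javascript
// Reduce a spectrum array to barCount values by averaging
// groups of neighbouring bins.
function averageBins(freqData, barCount) {
  var bars = [];
  var binsPerBar = freqData.length / barCount;
  for (var b = 0; b < barCount; b++) {
    var start = Math.floor(b * binsPerBar);
    // Always include at least one bin per bar
    var end = Math.max(start + 1, Math.floor((b + 1) * binsPerBar));
    var sum = 0;
    for (var i = start; i < end; i++) {
      sum += freqData[i];
    }
    bars.push(sum / (end - start));
  }
  return bars;
}
```

The result could then be handed to the sketch in place of the raw array, for example pjs.drawSpektra(averageBins(freqData, 64)).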

As usual, you can download the whole source code from here and try it out in the live demo here. Please note that the demo currently works with the latest stable version of Google Chrome.

Javascript Sound Experiments - Filtering [ProcessingJS + WebAudio]

I am working on a sound installation project where we are building our own custom devices to measure distance (using wireless radio-frequency technology) & create sounds (using DAC integrated circuits with SD card storage, connected to a custom-built analogue filter module) through simple electronic devices. Building the hardware with Marton Andras Juhasz is really exciting and inspiring in itself, not to mention the conceptual framework of the whole project. More hardware-related details are coming soon; the current state is shown in the video below:

This entry is about presenting filtered audio online, natively in the browser. I have to build an online representation of the system where the analog hardware modules can be seen and tried out within a simplified visualization (mainly to indicate the distances between the nodes in the system). Apart from the visualization, sound plays a key role in the online representation, so I started playing with the Web Audio API, where simple sound playback can be extended with filtering and effects using audio graphs. The state of web audio might seem a bit confusing at the moment (support, browser compatibility, etc.). To minimize confusion, you can read more about the Web Audio API here. One thing is for sure: the web audio context is controlled via JavaScript, so it fits well into any project that uses high-level JavaScript drawing libraries, such as ProcessingJS.

The basic structure of what we do here is:

1. Load & playback soundfiles

2. Display information, create visual interface

3. Use control data from the interface and drive the parameters of the sounds

Load & Playback soundfiles

I found a really interesting collection of web audio resources here that deal with loading audio asynchronously and preparing its content for further processing by storing it in buffers. Loading sounds and storing them in buffers is all handled by the attached JavaScript files. If you need to switch sounds later, you can do it asynchronously by calling loadBufferForSource(source to load, filename) anytime, anywhere on your page.

Display Information, create visual interface

Connecting your ProcessingJS sketch to the website can be done by following this excellent tutorial. You should write functions in your JavaScript that “listen” to ProcessingJS events. You can call any function, with any parameters. One important thing is that you have to actually connect the two systems by getting hold of the ProcessingJS instance, as the following snippet shows:

var bound = false;

function bindJavascript()
{
  var pjs = Processing.getInstanceById('c');
  if(pjs!=null)
  {
    pjs.bindJavascript(this);
    bound = true;
  }
  if(!bound) setTimeout(bindJavascript, 250);
}

bindJavascript();

We have some work to do on the ProcessingJS side as well:

// create javascript interface for communication with functions that are "outside"
// of processingjs
interface JavaScript
{
  void getPointerValues(float x, float y);
  void getFilterValues(float filter1, float filter2);
  void getGainValues(float gain1, float gain2);
}

// make the connection (passing as the parameter) with javascript
void bindJavascript(JavaScript js)
{
  javascript = js;
}

// instantiate javascript interface
JavaScript javascript;

Use control data from the interface and drive the parameters of the sounds

After connecting the HTML page with our sketch, it is possible to use any action in the sketch to control our previously loaded sounds. This way we separate the whole audio engine (handling, storing, etc.) from the visual interface (the ProcessingJS canvas with rich interactive content) by linking the two with a few functions that pass some parameters. These binding functions might remind you of the OSC way of connecting two pieces of software, where functionality stays clearly isolated within the different systems and only the parameters travel between them, represented as numbers, labels or other types of symbols.
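The JavaScript side of such a binding function is not listed here. As an illustration only, a function like getFilterValues could map a normalised 0..1 value coming from the sketch onto a filter cutoff. The sketch below assumes a BiquadFilterNode (created with context.createBiquadFilter()) and an exponential mapping between 100 Hz and 10 kHz; the range and the helper names are assumptions, not the values used in the demo.

```javascript
// Map a normalised control value (0..1) to a frequency in Hz on an
// exponential scale, which is closer to how we perceive pitch.
function controlToFrequency(value, minHz, maxHz) {
  var v = Math.min(1, Math.max(0, value)); // clamp to 0..1
  return minHz * Math.pow(maxHz / minHz, v);
}

// Hypothetical handler: apply one control value to one filter node.
// filterNode is expected to look like a BiquadFilterNode, i.e. to
// expose its cutoff as filterNode.frequency.value.
function applyFilterValue(filterNode, value) {
  filterNode.frequency.value = controlToFrequency(value, 100, 10000);
  return filterNode.frequency.value;
}
```

Only plain numbers cross the boundary between the sketch and the audio code, which keeps the two sides as isolated as described above.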

At the moment this example works only with Chrome (new stable releases). According to the developer docs it should work with the new Safari (WebKit) browser, but I couldn’t get it to work. I hope this will be fixed soon…

Try out the LIVE DEMO (you need the latest stable Google Chrome)

Download full source code (zip) from here

Javascript Procedural Sound Experiments: Rain & Wind [ProcessingJS + WebPd]

When working with sound on the web, it is common to use sound samples for events in games or rich-content websites, but procedural sound synthesis for different situations is rarely possible. In games it is often easier to use artificial, procedural sounds for rain, glass breaking, wind, sea, etc. because their parameters are easy to change on the fly. I was really excited when I discovered a small project called WebPd that aims to port the Pure Data sound engine to pure JavaScript and make it usable directly on websites without plugins. The script was initiated by Chris McCormick and is based on the Mozilla Audio Data API, which is under intense development by the Mozilla Dev team: at the moment it works with Firefox 4+.

Also - being a small project - there are only a few, very basic Pd objects implemented at the moment. For basic sound synthesis you can use a few sound generator objects in Pd (such as osc~, phasor~, noise~), but there are no real timing objects (delay, timer, etc.), so all event handling must be done on the JavaScript side and only triggered synthesis commands can be left to the Pd side. If you are familiar with developing projects with libPd for Android, or ofxPd for OpenFrameworks, you may know the situation.

So here I made a small test composition of a landscape that has some procedural audio elements: rain and wind. The rain is made with randomly pitched oscillators with very short ADSR events (in fact only A/R events, which are triggered from the JavaScript code; the actual values are passed directly to a line~ object in Pd). The density of the rain changes with a random factor, giving a more organic vibe to the clean artificial sounds. The wind is made with a noise object that is filtered continuously with a high-pass filter object. The amount of filtering depends on the size (area) of the triangle that is visible in the landscape as an “air sleeve”.
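The event logic described above lives on the JavaScript side. A rough sketch of three such helpers follows: one picks random parameters for a raindrop, one computes the triangle area, and one turns that area into a filter cutoff. All names, numeric ranges and the direction of the mapping (larger triangle, higher cutoff) are illustrative assumptions. The code only computes the values; how they are sent into the patch depends on the WebPd version in use.

```javascript
// Parameters for one raindrop: a random pitch plus a short
// attack/release envelope (times in milliseconds).
// rng is any function returning a number in [0, 1), e.g. Math.random.
function randomDrop(rng) {
  return {
    pitchHz: 2000 + rng() * 6000,
    attackMs: 1 + rng() * 4,
    releaseMs: 20 + rng() * 60
  };
}

// Area of a triangle from its three corner points (shoelace formula).
function triangleArea(ax, ay, bx, by, cx, cy) {
  return Math.abs(ax * (by - cy) + bx * (cy - ay) + cx * (ay - by)) / 2;
}

// Map the area linearly to a high-pass cutoff between minHz and maxHz
// (assumed direction: a larger triangle gives a higher cutoff).
function windCutoff(area, maxArea, minHz, maxHz) {
  var t = Math.min(1, Math.max(0, area / maxArea));
  return minHz + t * (maxHz - minHz);
}
```

Passing the random number generator in as a parameter makes the raindrop values reproducible when needed, while Math.random can be used for the live piece.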

If you would like to create parallel, polyphonic sounds, it might be a good idea to instantiate several Pd objects for different sound events. When I declared only a single Pd instance, not all the messages got through to the Pd patch: messages that I sent in every frame (for continuous noise filtering of the ‘wind’) ‘clashed’ with discrete, separate messages that were sent when the trigger() function was called for the raindrops. So I ended up using two Pd engines at the same time in setup():

pd_rain = new Pd(44100, 200);
pd_rain.load("rain.pd", pd_rain.play);
pd_wind = new Pd(44100, 200);
pd_wind.load("wind.pd", pd_wind.play);

This way, accurate values are sent to each of the Pd engines. Try out the demo here (you need Firefox 4+).

For user input and visual display I am using the excellent ProcessingJS library, which makes it possible to draw on & interact with the HTML5 canvas tag directly using Processing syntax. The response time (latency) is quite good and the sound signal rarely drops out, which is really impressive if you have any experience with web-based sound wave generation.

Permutation

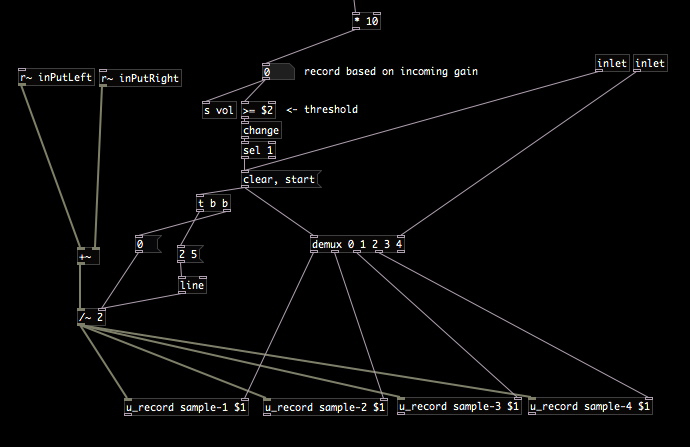

Permutation is a sound instrument developed for rotating, layering and modifying sound samples. Its structure was originally developed for a small MIDI controller that has a few knobs and buttons. It is then mounted on a broken piano, along with several contact microphones. The basic idea of using a dead piano as an untempered, raw sound source reflects the central position of this instrument in western music over the past few hundred years or so. Contact mics and prepared pianos, as we know, represent a constant dialogue between classical music theory and other, more recent free forms of artistic and philosophical representation. The aim of Permutation, however, is a bit different from this phenomenon. It is a temporal extension of gestures made on the whole resonating body of a uniquely designed sound architecture (the grand piano). In fact it is so well designed that it used to serve as a simulation tool for composers to mimic a whole group of musicians. A broken, old wooden structure has so many characteristic sound deformations that it becomes an intimate space for creating strange and sudden sounds that can be rotated and folded back onto themselves.

The system is built for live performances and has been used for making music for the Artus independent theatre company. The Pure Data patch can be downloaded from here. Please note that it was developed rapidly for use with a MIDI controller, so the patch may be confusing. The patch contains some code from the excellent rjdj library on GitHub.

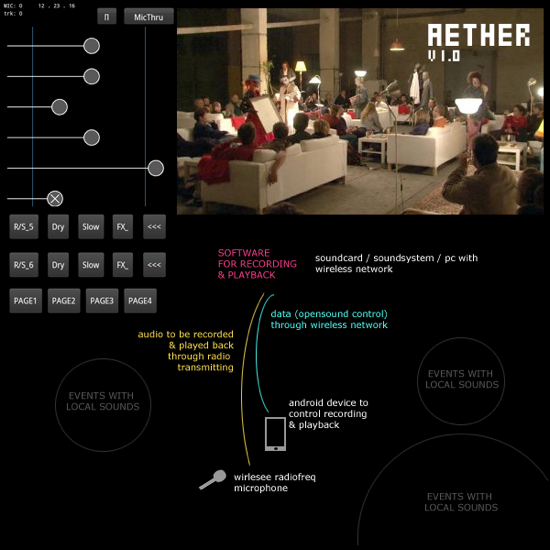

Aether

Aether is a wireless remote application developed for collecting spatially distributed sound memories. Its aim is to collect and use sound events on the fly. The client interface is shown on the left, the performance environment we are using it in can be seen on the right, and a diagram of the setup is at the bottom of the image.

While walking around a larger space (one that can be covered by an ordinary wireless network) one can send / receive realtime data between the client app and the sound system. All the logic, synthesis and processing happens on the server providing the network (a laptop located somewhere in the space), while control, interaction and site-specific probability happen on the portable, site-dependent device. The system was used for several performances of the Artus independent theatre company where action and movement had to be recorded and played back immediately.

The client app runs on an Android device that is connected to a computer running Pure Data through a local wireless network (using the OSC protocol). The space for interaction and control can be extended to the limits of the wireless network coverage and the maximum distance between the radio-frequency microphone and its receiver. The system has been tested and used in a factory building, over an area of up to 250-300 square meters.
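For readers unfamiliar with OSC: a message is a small binary packet made of an address string, a type tag string and the arguments, with each string null-terminated and padded to a multiple of four bytes. The sketch below encodes a message with a single float argument. It is written in JavaScript for consistency with the other examples on this page, not in the language of the actual client, and the address /gain is a made-up example rather than one used by Aether.

```javascript
// Encode a string the OSC way: ASCII bytes, null-terminated,
// padded with zeros to a multiple of 4 bytes.
function oscString(str) {
  var size = (Math.floor(str.length / 4) + 1) * 4;
  var bytes = new Uint8Array(size); // typed arrays start zero-filled
  for (var i = 0; i < str.length; i++) {
    bytes[i] = str.charCodeAt(i) & 0x7f;
  }
  return bytes;
}

// Build an OSC message carrying one 32-bit float argument.
function encodeOscFloat(address, value) {
  var addr = oscString(address);
  var tags = oscString(',f'); // type tag string: one float follows
  var packet = new Uint8Array(addr.length + tags.length + 4);
  packet.set(addr, 0);
  packet.set(tags, addr.length);
  // OSC numbers are big-endian, hence littleEndian = false
  new DataView(packet.buffer).setFloat32(addr.length + tags.length, value, false);
  return packet;
}
```

The resulting bytes would typically be sent as the payload of a single UDP datagram; on the Pd side an OSC parsing object turns them back into an address and a number.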

The client code is based on an excellent collection of Processing/Android examples by ~popcode that is available to grab from Gitorious.

The source is available from here (note: the server Pd patch is not included because of its ad-hoc spaghettiness)