Animating in the Audio Domain [sound, code, synesthesia]

There are several situations where accurate event scheduling is needed. In a few of my recent projects I kept running into the same problem: how to keep things in sync and launch events and animations really accurately. These projects are mainly audio-based apps, so timing has become a key problem. One example is sequencing the sounds in Shape Composer, where different “playheads” with different speeds and boundaries read out values from the same visual notation while the user modifies them. It is not that simple to keep everything in sync, even when the user changes all the parameters of a player.

As I am arriving at native application development and user interface design from the world of visual programming languages, I decided to port a few ideas and solutions that worked really well for me; this post is one of the first in this series. Data flow languages for rapid prototyping have a lot of interesting, unique solutions that might also be useful in text-based, production-ready environments (especially for people whose mindset originates from non-text-based programming concepts). Nice examples are the concept of spreads in vvvv, or audio rate controls in Pure Data. In Pure Data, the name says it all: anything is data, and even otherwise complicated operations on audio signals can easily be used in other domains (controlling events, image generation, simulations, etc.). With the [expr~] object one can operate on audio signal vectors easily, but there is also a truly beautiful concept for playing back sounds: you read out sound values from a sound buffer using another sound. The values of a simple sound wave are used to iterate over the array.

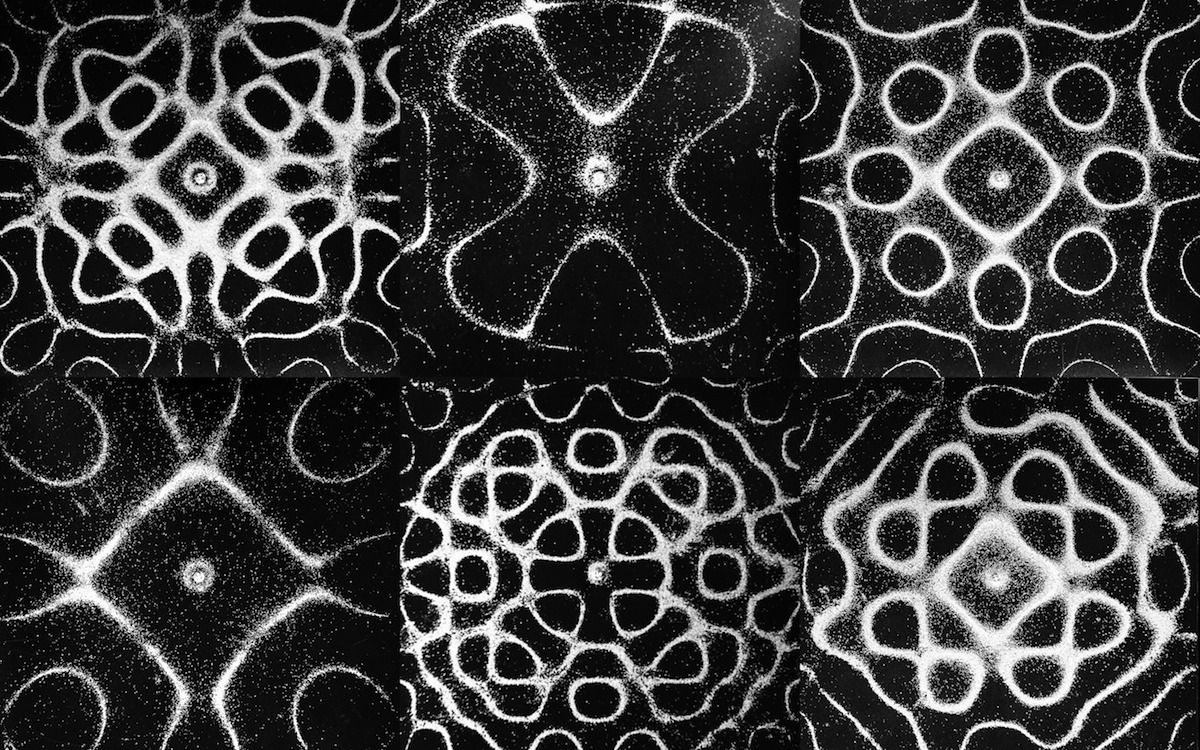

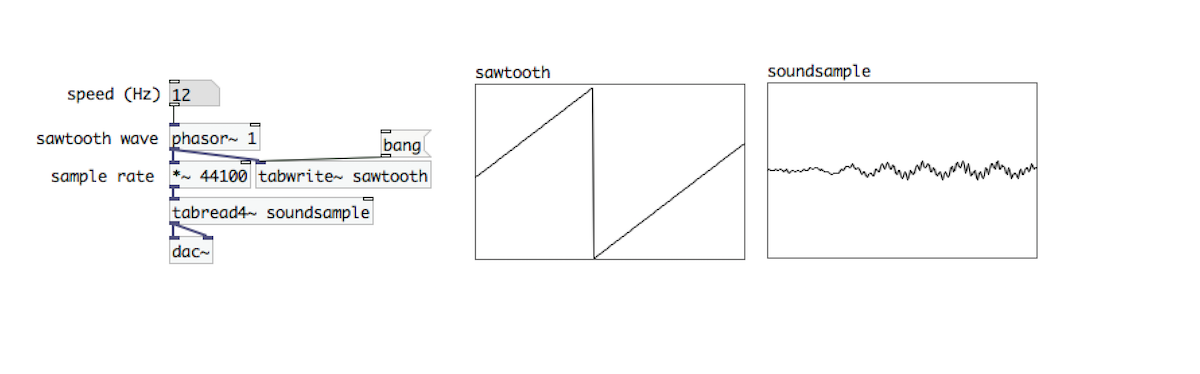

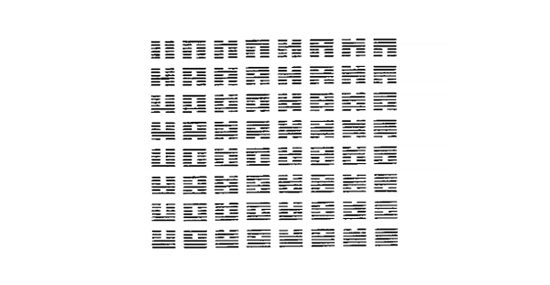

The image above shows how to use a saw wave, a [phasor~] object, to read out values from an array using the [tabread4~] object. The phase (a ramp between 0 and 1, multiplied by the sample rate, i.e. 44100) reads out individual sample values from the array. As you shift the phase, you iterate over the values. This is the “audio equivalent” of a for loop. The amplitude of the saw wave becomes the length of the actual loop slice, while the frequency of the saw wave becomes the pitch of the played-back sound. The phase of the saw wave is the actual position in the sound sample. This method of using one audio signal to control another is one of the most beautiful underlying concepts of Pure Data, and it extends well into other domains.
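The same idea can be sketched outside Pure Data. The following is only an illustration of the principle, not a port of the patch: the names `phasor` and `read_table` are made up for this example, and the lookup uses simple linear interpolation, whereas [tabread4~] uses four-point interpolation.

```python
import math

SAMPLE_RATE = 44100  # samples per second, as in the text


def phasor(frequency, num_samples, sample_rate=SAMPLE_RATE):
    """Yield a ramp that rises from 0 to 1 `frequency` times per second."""
    phase = 0.0
    for _ in range(num_samples):
        yield phase
        phase = (phase + frequency / sample_rate) % 1.0


def read_table(table, phase, slice_length):
    """Read `table` at a fractional position with linear interpolation.

    `phase` is in [0, 1); `slice_length` plays the role of the ramp's
    amplitude: how many samples of the table one ramp cycle covers.
    """
    position = phase * slice_length
    index = int(position)
    frac = position - index
    a = table[index % len(table)]
    b = table[(index + 1) % len(table)]
    return a + (b - a) * frac


# A one-second table holding a single cycle of a sine wave.
table = [math.sin(2 * math.pi * i / SAMPLE_RATE) for i in range(SAMPLE_RATE)]

# A 2 Hz ramp scans the whole table twice per second, so the stored
# sound is played back at double speed.
output = [read_table(table, p, len(table)) for p in phasor(2.0, SAMPLE_RATE)]
```

Changing the frequency passed to `phasor` changes the playback speed, and passing a smaller `slice_length` loops over only the first part of the table.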

See these animations in motion here

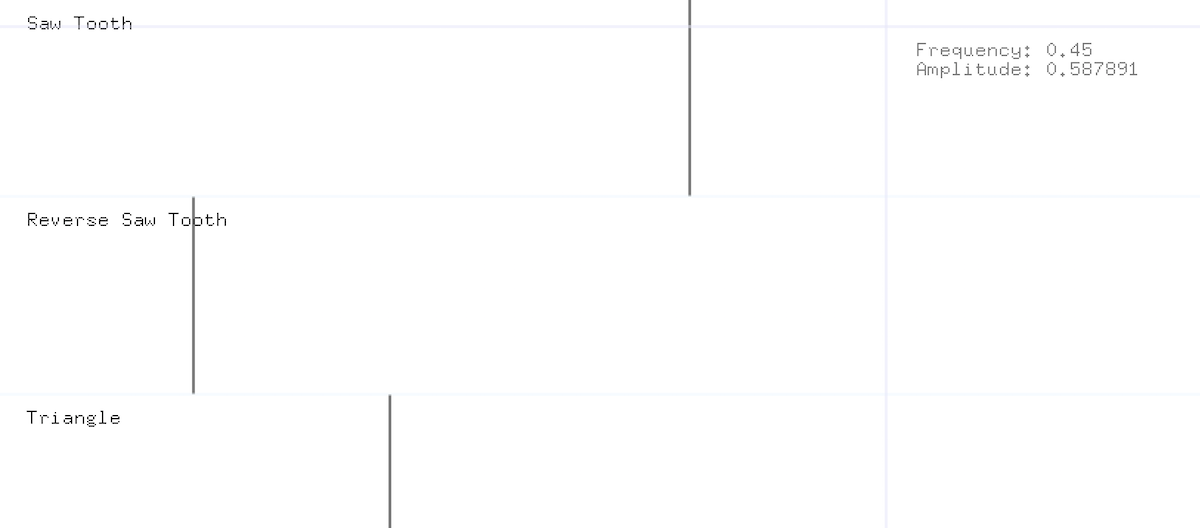

The Accurate Timing example that this post is about tries to bring this concept into other languages where you can access the DSP cycle easily. With openFrameworks it is easy to call functions within this cycle. In fact, anything you put in the per-sample loop of ofSoundStream’s audioOut() callback is evaluated at your selected audio sampling rate. Usually this is 44.1 kHz, which means your code can run 44100 times per second, which is far more precise than making the call in an update() or draw() function. The audio thread keeps time even if your application’s frame rate is jumping around or dancing like a higgs. It can be useful to keep time-based events in a separate, reliable thread, and the audio thread is just perfect for this. This example uses a modified version of an oscillator class from the AVSys Audio-Visual Workshop Github repository to control the events that are visible on the screen.
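To illustrate why counting samples gives a reliable clock, here is a small simulation. It is not openFrameworks code: `SampleClock` and its `audio_out` method are hypothetical stand-ins for an audio callback that is handed one buffer at a time, and the buffer size of 512 is an assumption.

```python
SAMPLE_RATE = 44100
BUFFER_SIZE = 512  # assumed buffer size; real devices vary


class SampleClock:
    """Counts samples and fires events at exact sample positions."""

    def __init__(self, sample_rate=SAMPLE_RATE):
        self.sample_rate = sample_rate
        self.sample_count = 0
        self.events = []  # (due_sample, name) pairs, kept sorted
        self.fired = []   # (sample_index, name) pairs, for inspection

    def schedule(self, seconds, name):
        """Schedule `name` to fire `seconds` after the clock started."""
        due = round(seconds * self.sample_rate)
        self.events.append((due, name))
        self.events.sort()

    def audio_out(self, buffer_size=BUFFER_SIZE):
        """Stand-in for an audio callback: processes one buffer."""
        for _ in range(buffer_size):
            # Fire every event whose sample position has been reached.
            while self.events and self.events[0][0] <= self.sample_count:
                _, name = self.events.pop(0)
                self.fired.append((self.sample_count, name))
            self.sample_count += 1


clock = SampleClock()
clock.schedule(0.5, "kick")
clock.schedule(0.25, "snare")

# Drive the clock for roughly one second of audio.
for _ in range(SAMPLE_RATE // BUFFER_SIZE + 1):
    clock.audio_out()
```

Because the events are tied to the sample counter rather than to the drawing loop, they land on the same sample no matter how irregular the frame rate is.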

When building things where time really matters (such as sequencers, automated animations or other digital choreography), a flexible, accurate “playback machine” can come in really handy. The concept in this example is just a simple way of mapping one domain to another: the phase of a wave is applied to the relative position of the animated value, while the amplitude of the wave represents the boundaries of the changing value. Finally, the frequency represents the speed. There might be other really interesting ways of animating time-sensitive artefacts, but borrowing the concepts and attributes of sound (and physical waves) can be truly inspiring for developing similar ideas and for connecting concepts between previously isolated domains.
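The mapping described here can be written down in a few lines. This is a minimal sketch with a hypothetical class name, `RampAnimator`, and it is not the oscillator class from the repository: the phase gives the relative position, `low` and `high` give the boundaries, and the frequency gives the speed.

```python
SAMPLE_RATE = 44100


class RampAnimator:
    """Animates a value between two boundaries with a saw-wave phase."""

    def __init__(self, frequency, low, high, sample_rate=SAMPLE_RATE):
        self.frequency = frequency  # cycles per second: the speed
        self.low = low              # lower boundary of the value
        self.high = high            # upper boundary of the value
        self.sample_rate = sample_rate
        self.phase = 0.0            # relative position, 0 <= phase < 1

    def tick(self):
        """Advance by one audio sample and return the animated value."""
        value = self.low + self.phase * (self.high - self.low)
        self.phase = (self.phase + self.frequency / self.sample_rate) % 1.0
        return value


# A playhead that sweeps from x = 100 to x = 500 once every two seconds.
playhead = RampAnimator(frequency=0.5, low=100, high=500)
x = playhead.tick()
```

Calling `tick()` once per audio sample keeps the animated value locked to the audio clock; the drawing code only has to read the latest value.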

The source for this post is available at: Audio Rate Animations on Github

Accidental Calculus [Nature, Code, Design]

What are the similarities between ancient Arabic systems for automation, origami folding, poetic code, adaptive design, the legacy of John Cage, and the western music tradition, including the gameflow-like dice music pieces of Johann Philipp Kirnberger or Wolfgang Amadeus Mozart? All of these examples represent a kind of relation between the logic of the mind and some behavioral aspect of our external environment. On one hand, these activities and artists bring forward aesthetic decisions in which creative minds let independent elements into the flow of the creative process to take part in the creation of the piece. The designer defines frameworks and boundaries for semi-autonomous processes which lead to the evolution of the final piece. On the other hand, these fundamental processes are not only aesthetic decisions but also ways of thinking about our environment and about ourselves. They borrow inspiration from scientific, philosophical and mystical traditions that can be applied to many different fields and phenomena.

When dealing with formalized systems we always have to follow the rules of some procedure. Frameworks are usually constructed from a basic set of rules which can lead to complex structures when these instructions are iterated and repeated. These simple sets of repetitive rules (or algorithms) are applied to achieve different goals in different contexts and situations (think of any language, for example). Many people like to think that randomness adds value and complexity to their systems: because we cannot predict its behavior, it will surprise the observer with ever-changing, never-repeating outputs and combinations. According to a recent interview with Mitchell Whitelaw (I can’t find the direct link, but it appeared in Neural), this is true in terms of variability, but randomness does not add qualitative value to a system. Random events are really useful for simulating chunks of data for unpredictable behaviors: when we need to simulate unpredictable input values for a system for further investigation. Let’s observe a few very basic distribution models.

fig. 1 - Random probabilities. Left to right: a ) no random factor, linear steps. b ) random walk with small amount of randomness. c ) random walk with increased randomness. d ) full range random steps
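The four cases in the figure can be approximated with two small functions. This is only a sketch of the idea, not the code that produced the figure; the function names and the `jitter` parameter are assumptions made for the example.

```python
import random


def random_walk(steps, jitter, rng):
    """Walk forward one unit per step, disturbed by up to +/- `jitter`.

    jitter = 0 gives the purely linear case (a); small and larger
    values give the random walks of cases (b) and (c).
    """
    position = 0.0
    path = []
    for _ in range(steps):
        position += 1.0 + rng.uniform(-jitter, jitter)
        path.append(position)
    return path


def full_range_steps(steps, rng):
    """Case (d): every value is drawn independently from the full range."""
    return [rng.uniform(0.0, steps) for _ in range(steps)]


rng = random.Random(1)  # fixed seed so the runs are repeatable
linear = random_walk(20, 0.0, rng)
slightly_random = random_walk(20, 0.3, rng)
more_random = random_walk(20, 2.0, rng)
fully_random = full_range_steps(20, rng)
```

In the first three cases each value still depends on the previous one; in the last case that dependency is gone completely.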

If we know the parameters of our goal exactly, we can easily get there by very strict and precise instructions. Say we have a point on a plane: we can get there if we add a simple vector from our starting point in the direction of the given location. Simple and solved. If we know only one parameter of our point, we can converge on the desired location by adding some random probabilities to our system. This will be less effective, but we will achieve the goal sooner or later. Does it mean that if we don’t know any of the coordinates of our point, we should use a totally random distribution? Well, not really, because a totally random distribution allows repetition (the following states do not depend on the previous ones), so we may never reach the goal. If we really would like to reach our goal, we still need to use some semi-random algorithm where we combine order with chaotic behavior. This can be implemented in several ways. We can use some kind of basic random walk algorithm, which is not very effective, or we can use very sophisticated path finding algorithms that are far more useful. Or we can use a straight, ordered algorithm with no random probability, like a television scan line whose cathode ray moves from point to point over each line, sequentially. Or we can generate random numbers and avoid previously generated ones, so that finally we stumble upon the position of our point. There are many, many ways to solve a problem. What we choose might depend on the context of the situation (how much time we have, how precise we need to be, etc.).
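Three of these strategies are easy to compare on a small grid. The sketch below is an illustration under assumptions of my own (a rectangular grid of integer cells and made-up function names); each function returns how many tries it needed to hit the target.

```python
import random


def scan_search(width, height, target):
    """Ordered search: visit every cell line by line, like a scan line."""
    tries = 0
    for y in range(height):
        for x in range(width):
            tries += 1
            if (x, y) == target:
                return tries
    return None  # target lies outside the grid


def random_no_repeat_search(width, height, target, rng):
    """Random guesses that never repeat: a shuffled list of all cells."""
    cells = [(x, y) for y in range(height) for x in range(width)]
    rng.shuffle(cells)
    return cells.index(target) + 1  # always succeeds within width*height tries


def random_repeat_search(width, height, target, rng, max_tries):
    """Fully random guesses: repeats are allowed, success is not guaranteed."""
    for tries in range(1, max_tries + 1):
        guess = (rng.randrange(width), rng.randrange(height))
        if guess == target:
            return tries
    return None  # gave up without ever hitting the target


rng = random.Random(42)
target = (3, 2)
ordered = scan_search(10, 10, target)
shuffled = random_no_repeat_search(10, 10, target, rng)
repeated = random_repeat_search(10, 10, target, rng, max_tries=100)
```

The ordered and the non-repeating searches are bounded by the number of cells, while the fully random one may return `None` however many tries it is given.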

Specific distribution models are also easily observable in our regular daily routines. The temporal distribution of our activities is more or less predictable if we find patterns and repetitive occurrences between successive events. Hungarian scientist Albert-László Barabási found an interesting model that can describe key aspects of our behavior. He calls these successive events “bursts”. He and his colleagues observed how frequently people respond to mail, talk to each other, or even how often they move between two locations. They found that there are surprisingly big gaps and silences between active events. It’s obvious that we do not speak for a few hours, then we meet someone and talk a lot. We do not read mail for a few days, then we respond to all of it within a few hours. Our basic communication structure works like this. Similarly, but less obviously, moving between physical locations shows bursts and calm periods, too. How much we travel is not a constant average value. If you go on vacation, you move a lot; then for several months you just move locally between the places of your regular life. This concept can be extended even to our regular routines, relationships and consumer behavior.

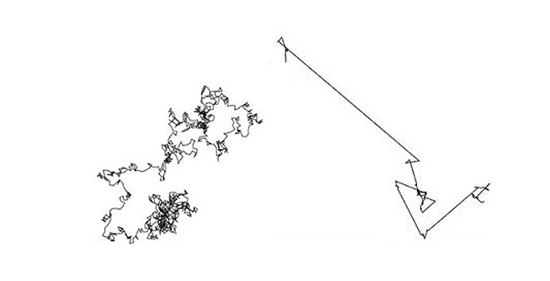

fig. 2 - Brownian motion (left), Levy Flight (right)

This distribution model is well known from other natural processes and is called a Lévy flight. According to the blog Seeing Complexity:

“Lévy flights are described by a random walk, which is more or less any mathematical expression of a trajectory with successive random steps, distributed with a non-exponentially bounded probability distribution with theoretically infinite variance. So what does that mean? It is a description of motion. Take as a contrast Brownian motion, which is modeled after random movement of particles in a fluid. A Lévy flight is instead a cluster of movements, some short and some long. It was identified by the late Benoit Mandelbrot, as an outgrowth of chaos theory.”
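The difference between the two kinds of motion comes down to how the step lengths are distributed. As a rough sketch (not taken from the quoted blog), Gaussian steps stand in for Brownian motion and Pareto-distributed steps stand in for a Lévy flight; the exponent `alpha = 1.5` is an assumed example value, chosen because a Pareto distribution has infinite variance when alpha is at most 2.

```python
import math
import random


def brownian_steps(count, rng, scale=1.0):
    """Step lengths that stay close to a typical size."""
    return [abs(rng.gauss(0.0, scale)) for _ in range(count)]


def levy_steps(count, rng, alpha=1.5, min_step=1.0):
    """Heavy-tailed step lengths: mostly short, occasionally very long."""
    return [min_step * rng.paretovariate(alpha) for _ in range(count)]


def walk_2d(step_lengths, rng):
    """Turn step lengths into a 2D path with a random direction per step."""
    x = y = 0.0
    path = [(x, y)]
    for length in step_lengths:
        angle = rng.uniform(0.0, 2.0 * math.pi)
        x += length * math.cos(angle)
        y += length * math.sin(angle)
        path.append((x, y))
    return path


rng = random.Random(7)
brownian_path = walk_2d(brownian_steps(1000, rng), rng)
levy_path = walk_2d(levy_steps(1000, rng), rng)
```

Plotted, the first path looks like an even scribble, while the second shows dense clusters connected by a few long jumps, much like the bursts described above.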

Why are these facts important from a designer’s point of view? Think of procedures. Our regular routines are constructed subconsciously from trivial procedures. Our current states are more or less predictable. If we work with this potential, we might build tools that are easier to understand and that adapt themselves to personalized usage. An object that is capable of measuring its environment and changing its internal structure based on these principles can be adapted more seamlessly and easily to someone’s internal representations. This might lead to adaptive, invisible interfaces where there is no UI or graphic design anymore, but something else. Something which deals with rhythm, temporality, routines and changing interests, fluid contexts and self-regulating algorithms. One might call it contextual design, behavior design, or anything that is brought forward by some sort of dynamic nature. Observing the rhythms around (and inside) us, temporal sequences, music, dance and human relationships is a key element in asking the right questions within these unexplored territories. Software has meaningful dynamic inputs and deeply reconfigurable internal representations, so all types of software can adapt their internal structure to their environment. Software must be open not only in the regular sense, which refers to its source, but also in terms of its behavior. Think of responsive design, reactive architecture, or any open-ended process from the art world.

As Bret Victor, a great interventionist and interface designer, points out: interaction is good when dealing with manipulation software (editors, games, etc.). But once we are dealing with information software, where we are more likely to learn than to create, interaction turns into interruption. An interface that represents information should work with as little interaction as possible, in order to let the perceptual and cognitive flow go on seamlessly. Such software might use environmental conditions and reconfigure its behavior when needed. This restructuring is based on data from the user’s history, actual physical position, current time and other environmental parameters, but also includes social integration, similar interests, rankings from relevant people, etc. The interface must be in real conversation with the context of the user. This type of meaningful relationship was originally described by Gordon Pask, who adapted elements of human conversation when he was dealing with artificial, responsive input and output systems that took form within (and out of) the field of cybernetics.

fig. 3 - One sequence of the 64 hexagrams of the I Ching, or the Book of Changes. This ancient system is a method of divination based on a binary system and chance operations.

What are the strategies that we can observe in procedural thinking and algorithmic art? Janet Zweig introduces three basic concepts that she found while studying mystical systems, procedural art and computer practices. In her work “Ars Combinatoria” (pdf link) she distinguishes between permutation, combination and variation. These three are formalized concepts for manipulating an existing set of entities (numbers, musical sequences, words, etc.). Permutation means rearranging the elements of a set in different ways without adding or repeating any element. Combination means selecting and rearranging specific elements, reducing the original set. Making variations means rearranging the elements of the system while allowing repetition, redundancy and multiplication. Her interest in these methods goes far beyond practical definitions: she investigates the difference between spiritually based and purely process-based methods. She asks whether, when someone has a mystical experience by permuting the letters of the alphabet (as with the gates of Sefer Yetzirah or the ancient representations of the Tree of Life) or by permuting abstract binary symbols (as with the I Ching system or modern computer programs), this is a creative transformation or more like a meditative activity.
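The three operations can be tried out directly with Python's standard `itertools` module. The mapping below is my own reading of the definitions above rather than something taken from Zweig's text, and the three-note set is just an example.

```python
from itertools import combinations, permutations, product

notes = ["C", "E", "G"]  # an example set of entities

# Permutation: every ordering of the set, nothing added, nothing repeated.
perms = list(permutations(notes))

# Combination: select some of the elements, reducing the original set.
combos = list(combinations(notes, 2))

# Variation: sequences in which elements may repeat.
variations = list(product(notes, repeat=3))

print(len(perms), len(combos), len(variations))
```

Even with three elements the sizes diverge quickly: permutations grow with the factorial of the set size, while variations grow exponentially with the length of the sequence.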

According to Zweig, these procedural systems went through a qualitative shift over the course of history, from a purely mystical state to a more formal, process-based methodology. Starting from the great “Mystical Universalist” systems, thinkers and philosophers introduced “Symbolic logic” and logical games, which led to different “Semantic Interventions”. These form the basis for the concept of “Play” and playfulness that can be found in a wide spectrum of our activities, including board games, contemporary music and educational systems.

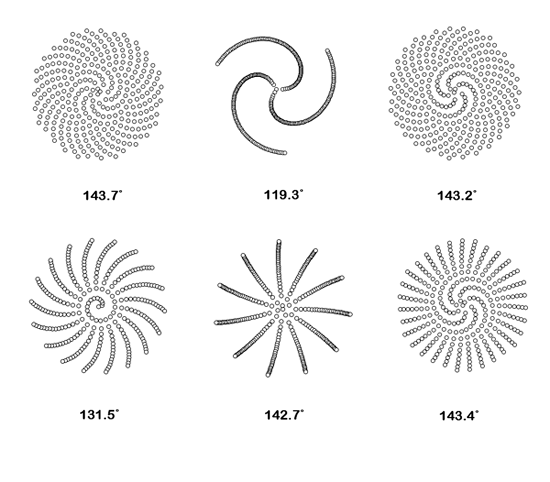

fig. 4 - Spiral experiments: Generating Fermat (or Archimedean) spirals with modified divergence angles.

What happens if we use a purely ordered algorithm (such as a formula for a spiral) with no random probabilities? Can we predict the result? It turns out that this is far from the truth, at least in terms of visual representation. As you can see in this visual experiment, each shape shows different characteristics compared to the others. A spiral shape is always present because of the clearly defined, ordered algorithm (r*r) = (a*a)*θ. However, as soon as you start tweaking one single parameter a tiny bit (in this case the divergence angle of the system, which is the variable “a” in the code below), completely different spatial distributions arise. An initial value is repeated and iterated over itself within the loop, so it has a multiplied effect which brings forward emerging shapes of visual complexity. A simple implementation in the Processing language:

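    size(600, 600);                 // window size (example value)
    translate(width/2, height/2);   // draw around the centre of the window

    float a = radians(137.5);       // divergence angle (example value, try tiny changes)
    float c = 8;                    // scaling factor (example value)
    int N = 500;                    // number of dots (example value)
    float r, phi;                   // polar coordinates
    int j, i;                       // row, column coordinates

    for (int n=0; n<N; n++)
    {
      phi = n*a;                    // the angle grows linearly with n
      r = c*sqrt(1.0*n);            // the radius grows with the square root of n
      j = int( r*cos(phi) );
      i = int( r*sin(phi) );
      ellipse(j,i,10,10);
    }

Addition: ellipse() is a function that draws an ellipse at a specified position with specified dimensions (these are the four parameters of the function). The variables a, c and N have to be initialized to run the code; the values above, like the window size and the translate() call, are only example starting points.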

Language is definitely a permutation of letters from the alphabet. However, the meaning and the core concepts of our consciousness are not contained in the discrete elements or parameters, and not even in the configurations of these elements, which are defined by syntactical rules and grammatical formulas. If we bring forward the world and our environment from concepts that are inherited from language, then language is more than just a communication tool. It is the incubator of analogies, which are the core working mechanisms of human thinking, as Douglas Hofstadter points out in his recent book Surfaces and Essences. It is the landscape around and inside us (be it visual, textual or mental language), it is the transfer channel of memes, it creates the internal representation of the world around and inside us. Some might say: the music is not in the piano.

A Procedural Drawing Course [Code, Pen, Paper]

Procedural properties are ancient problem-solving techniques in communication, art, crafts and all types of human activity. We can see their incarnations in everyday life, from beautiful handmade Indian rangolis to formalised design practices, mathematics, geometry, music, architecture and even pure code. This workshop deals with Procedural Drawing. Why procedural?

Rangoli, South India

Media and technology have an overwhelming relationship with our culture and society. The stream of events and the speed of information sharing are growing rapidly, and it is hard to catch up with the latest directions in specific areas of tools, art and technology. New social contexts and cultural interactions arise day by day. These days designers should focus and reflect on the here and now and keep an overview of current methodologies and design practices. We can always learn and adopt new, short-term, overhyped technologies, but it is much harder to grasp the underlying phenomena, the ancient design decisions, the human factor. This workshop is about catching these properties under the hood.

The language of the workshop is pseudocode. There is no specific type, no predefined syntax; we do not rely on any computers or function libraries; we use only pens, lines, paper, logic, repetition, rules, process. We are following the path that many philosophers, engineers, inventors and mystics have followed before us: think and draw.

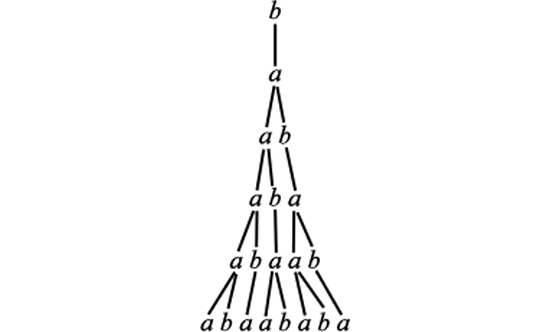

3d L-system made by Sebastien Parody

A very beautiful procedural example is the system made by Aristid Lindenmayer, who was trying to describe complex biological and natural growth with a simple set of rules. This system is named after him. He also co-authored a really inspiring book, “The Algorithmic Beauty of Plants” (pdf link). He wanted a way to test his theory about the growth pattern of a particular type of algae. His theory stated that the cells of this algae could be in one of two states: growth or reproduction. A cell in the growth state eventually grew into the reproduction state. A cell in the reproduction state eventually divided into two cells, one of which was in the growth state and the other in the reproduction state. Lindenmayer’s grammar system turned out to be a fantastic method for testing his theory. What Lindenmayer could not have predicted is the incredible usefulness of his system in many other areas, both in biology and in mathematics.

Basic Diagram of the Lindenmayer System

A Lindenmayer grammar is fully defined by an initial axiom and a set of one or more transformation rules. The initial axiom consists of a “string” of characters (e.g., alphabetic letters, punctuation, etc.). Each transformation rule gives a set of characters to search for in an axiom, and a set of characters to replace the original characters with. Applying all of the transformation rules to the initial axiom produces a new axiom. The rules can then be applied to this second axiom to produce a third axiom. Applying the rules to the third axiom produces the fourth, and so on. Each application of the transformation rules is called an iteration of the grammar.
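The algae example described earlier fits into a few lines of code. This is a minimal sketch: the symbols `G` (growth) and `R` (reproduction) and the function names are labels chosen for this example, and the rules simply restate the two state changes from the text.

```python
def rewrite(axiom, rules):
    """Apply every matching rule to every symbol of the axiom at once."""
    return "".join(rules.get(symbol, symbol) for symbol in axiom)


def generations(axiom, rules, count):
    """Return the initial axiom followed by `count` iterations."""
    result = [axiom]
    for _ in range(count):
        result.append(rewrite(result[-1], rules))
    return result


# A growing cell (G) matures into a reproducing cell (R);
# a reproducing cell divides into a growing and a reproducing cell.
ALGAE_RULES = {"G": "R", "R": "GR"}

history = generations("G", ALGAE_RULES, 5)
for step, axiom in enumerate(history):
    print(step, axiom)
```

Starting from a single growing cell, the lengths of the successive axioms are 1, 1, 2, 3, 5 and 8, the beginning of the Fibonacci sequence.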

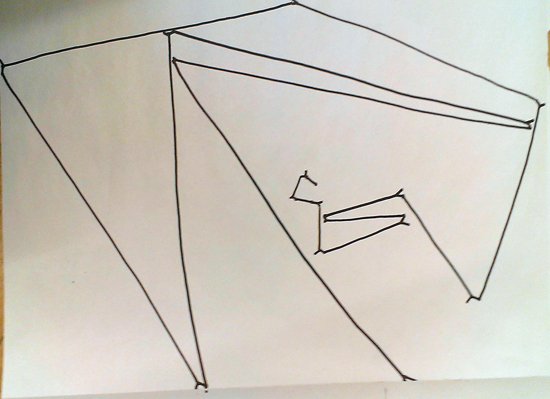

Pen Drawing from the Course: Marcell Torok gave instructions to synchronize your length of line with the time you are breathing in and breathing out

Another interesting aspect of procedural, algorithmic thinking is something that we can call the “stage analogy”. As Rohit Gupta (Fadereu) puts it when referring to the analogies between stage, performance and programming: “As much as our machines, we have become the processors of information, but we do not read text and instructions line by line as computers do. We scan in less than a blink and get an overall visual sensory pattern - like the notes in a musical chord, or the Fourier Transform of a rich spectral signal from atomic emission, we break it down in a subconscious way.” Actors and performers act based on a predefined set of rules, whether these are improvisational or fully, “strictly” followed algorithms.

Pen Drawing from the Course: Attila Somos was experimenting on how to formalize & define recursion

Visual arts started to adopt these concepts with the rise of the Dada movement in the first decades of the twentieth century: artists and performers were giving instructions, pseudocode, for interpreting (compiling) their pieces. Like musical notation, which is originally an interface for representing different events distributed in time, drawing and the visual arts also reached a state where the liveness or the actual “happening” of the concept is separated from the thoughts, aka the software. Fluxus in the sixties, conceptual artists, Sol LeWitt, and Miklos Erdely with his creative drawing workshops and conceptual works also continued the tradition.

This tradition is widely used and democratized today: since we are working with software separated from hardware, we can find this concept embedded very deeply in our everyday life. You can see all the works from the course (the rules are in Hungarian) here. The workshop was held at MOME Media Design, 2013.

SlitScan_JS [Webcam, ProcessingJS]

Slitscan, or “slit-scan photography”, refers to cameras that use a slit, which is used in particular in scanning cameras for panoramic photography. It has many implementations with different hardware and software languages in many artistic and technological contexts. As I am currently working on a project that involves some aspects of this technique (but extended into something totally unusual and strange, a bit more about it later…), I just put together a quick hack that combines the recently introduced media API for the user’s webcam and microphone with processingjs to make life easier.

In fact the getUserMedia() query can be used in a much wider context than this example. Since we can access all pixels of the live video feed using JavaScript, it is becoming a lot easier to build motion tracking, color tracking and other computer-vision-based sensing technologies for plugin-free, open, web-based applications. Here is a really simple example which calls the loadPixels() function of Processing so that each pixel can be set and replaced within the image. As a simple result, we place ellipses with the corresponding color values of the pixels on the screen.
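The slit-scan principle itself is independent of the webcam API. The following sketch is not the demo's code: it assumes that frames are plain nested lists of pixel values (rows of columns) and uses a made-up function name, `slit_scan`, to show how one column per frame is assembled into a new image.

```python
def slit_scan(frames, slit_x):
    """Build an image whose column t is column `slit_x` of frame t.

    `frames` is a list of equally sized frames; each frame is a list
    of rows, and each row is a list of pixel values.
    """
    height = len(frames[0])
    return [[frame[y][slit_x] for frame in frames] for y in range(height)]


# Five tiny test frames, 4 pixels wide and 3 pixels high. Each pixel
# stores (frame number, x, y) so the result is easy to inspect.
frames = [
    [[(t, x, y) for x in range(4)] for y in range(3)]
    for t in range(5)
]

image = slit_scan(frames, slit_x=2)
```

The resulting image is as wide as the number of frames: time is laid out along the horizontal axis, which is what gives slit-scan pictures their stretched look.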

You can try out the working demo (tested & working with Google Chrome)

Grab the source from Github

PolySample [Pure Data]

I’ve been involved in a few projects recently where simultaneous playback of multiple sounds was needed. Working on the projects Syntonyms (with Abris Gryllus and Marton Andras Juhasz), SphereTones (with Binaura), and No Distance No Contact (with Szovetseg39) repeatedly brought me back to the concept of playing overlapping sound samples. That is, you need to play back a sample and then start playing it again before the previous instance has finished. The Pure Data musical environment is really useful for low-level sound manipulation and can be tweaked in specific directions. I searched the web for solutions, but didn’t find a really simple polyphonic sound sampler with adjustable pitch that is capable of playing back multiple instances of the same sound when needed. For instance, if you need to play long, resonating, vibrating sounds, it is useful to let the previous one finish playing so that the next sound doesn’t interrupt it; instead there are two instances of the same sound, both left to decay smooth and clear. Like raindrops, percussion, etc.

As a starting point I found myself again in the territory of dynamic patching. As you can see in the video (which is an older pd experiment of mine), there are things that can be left to pd itself. Creating, deleting and connecting objects can be done quite easily by sending messages to pd itself. With PolySample, once the user creates a [createpoly] object, it automatically creates several samplers inside itself. The first argument is the name of the sound file to be played back. The number of generated samplers can be given as a second argument. There is an optional third argument for changing the sampling rate (which is 44100 by default). This is useful when working with libPd and the like, where different devices and tablets operate at a lower sampling rate. So a sampler can be created as

[ createPoly < sound filename > < numberOfPolyphony > < sample rate > ]

The first inlet of the object accepts a list: the first element is the pitch, the second element is the volume of the actual sample to be played. This is useful when trying to mimic string instruments or samples with a really wide dynamic range. This volume sets the initial volume of each sample (within the polyphony), whereas the second inlet accepts a float for controlling the overall volume, like a mixer.
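For readers who do not use Pure Data, the behavior can be modelled roughly as follows. This is a conceptual sketch and not how the patch is built: the class name, the round-robin choice of the next voice and the way positions advance are all assumptions made for the illustration.

```python
class PolySampler:
    """Toy model of a polyphonic sampler with a fixed number of voices."""

    def __init__(self, sample_length, num_voices):
        self.sample_length = sample_length   # length of the sound in samples
        self.voices = [None] * num_voices    # None marks a silent voice
        self.next_voice = 0                  # round-robin pointer (assumption)
        self.master_volume = 1.0             # overall volume, like a mixer

    def trigger(self, pitch, volume):
        """Start a new instance without cutting off the ones still playing."""
        used = self.next_voice
        self.voices[used] = {"position": 0.0, "pitch": pitch, "volume": volume}
        self.next_voice = (self.next_voice + 1) % len(self.voices)
        return used

    def advance(self, num_samples):
        """Move every playing voice forward and free the finished ones."""
        for index, voice in enumerate(self.voices):
            if voice is None:
                continue
            voice["position"] += voice["pitch"] * num_samples
            if voice["position"] >= self.sample_length:
                self.voices[index] = None

    def active_count(self):
        return sum(1 for voice in self.voices if voice is not None)


sampler = PolySampler(sample_length=1000, num_voices=3)
sampler.trigger(pitch=1.0, volume=0.8)   # first raindrop
sampler.advance(400)
sampler.trigger(pitch=1.0, volume=0.5)   # second one overlaps the first
```

With only one voice the second trigger would cut off the first sound; with several voices both instances decay on their own.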

You can create as many polyphonic samplers as you wish (keep in mind that smaller processors can freak out quite quickly if you create tons of sample buffers at the same time). The project is available on GitHub, here.

Built with Pd Vanilla, should work with libPd, webPd, RJDJ and other embeddable Pd projects.